Our creative systems have helped generate over £400M in revenue for DTC brands over the past few years. Throughout, staying agile has been one of the main constants. The Meta landscape has changed drastically over the past 5 years, and 2025 is no different.

The rules of paid social have changed - again.

Meta’s new Andromeda engine has replaced tidy, rule-based targeting with a hungry, probabilistic system that lives on fresh data and limitless creative options. It can trial 5,000 ads a week, not 50, which means the brands that win are the ones that feed it a steady diet of diverse, insight-driven assets - and they do it fast.

This guide distils everything you need to thrive in that reality.

Inside, you’ll learn how to generate strategic creative volume, test with surgical precision, and scale winners without wasting budget. Whether you’re running £500 a day or £50k, you’ll get concrete frameworks, checklists, and campaign architectures that turn chaos into disciplined experimentation.

Over the past few years, Meta has been shifting away from hands-on account management towards automation: from the early days of automation with CBOs all the way through to Meta Andromeda, its newest iteration of Advantage+.


Meta’s Andromeda update has fundamentally shifted creative testing dynamics. It requires a strategic volume-based approach over traditional concept isolation, because it can now support 5,000 ads a week rather than 50. The brands that understand this and cater to Andromeda’s needs will be the brands that win on Meta.

Ultimately, performance now depends on strategic volume, speed, and iteration of creative. You need to focus on creating a high volume of content that appeals to distinctly different audience personas and preferences.

“Soul-mate theory” is just shorthand for Andromeda’s goal: every ad impression should feel as if the advertiser crafted that exact image and line of copy specifically for the person viewing it, and the system now has the scale and smarts to try.

You should now operate under the theory that there is a perfect creative for each user. Instead of “Which audience fits this ad?”, the question becomes “Which specific ad version will resonate most with this single user right now?”

But what does that actually mean in this context?

The algorithm behaved like a rulebook.

If a user matched certain parameters (e.g., location, age, interest in “running shoes”), the system would follow a fixed chain of logic to choose which ad to show.

Every time the same inputs came in, it produced the same output.

This meant Meta was limited, it was:

The algorithm now behaves like a statistician + gambler + learner.

It doesn’t just look at hard rules; it weighs probabilities that a given ad will perform best based on vast, shifting signals: behaviour, history, context, creative, time of day, etc.

Two identical users might now see different ads, because the system is optimising based on the likelihood of engagement or conversion, not fixed logic.

This creates an algorithm that is:

Moving from deterministic to probabilistic means the ad-picking brain went from rigid “if-this-then-that” rules to a smart, ever-learning “best-guess” approach. Instead of guaranteeing the same ad sequence every time, it now plays the odds. Your customers get ads that feel more relevant, you see better results, and the algorithm gets smarter.
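To make the contrast concrete, here is a toy sketch in Python. It is not Meta's actual system: the ad names, the rule thresholds, and the likelihood scores are all made up for illustration. The first function always returns the same ad for the same inputs, while the second samples an ad in proportion to an estimated chance of converting.

```python
import random

# Hypothetical ad names, for illustration only.
ADS = ["trail_runner_video", "city_commuter_static", "price_drop_carousel"]


def pick_ad_deterministic(user: dict) -> str:
    """Rule-based: the same inputs always map to the same ad."""
    if user.get("interest") == "running shoes" and user.get("age", 0) < 35:
        return ADS[0]
    if user.get("interest") == "running shoes":
        return ADS[1]
    return ADS[2]


def pick_ad_probabilistic(scores: dict, rng: random.Random) -> str:
    """Best-guess: sample an ad in proportion to its estimated likelihood."""
    ads = list(scores)
    weights = [scores[ad] for ad in ads]
    return rng.choices(ads, weights=weights, k=1)[0]


user = {"age": 29, "interest": "running shoes"}

# Assumed likelihood estimates for this one user at this moment.
scores = {
    "trail_runner_video": 0.6,
    "city_commuter_static": 0.3,
    "price_drop_carousel": 0.1,
}

rng = random.Random(7)  # seeded so the sketch is repeatable
deterministic_picks = {pick_ad_deterministic(user) for _ in range(100)}
probabilistic_picks = [pick_ad_probabilistic(scores, rng) for _ in range(1000)]

print(deterministic_picks)            # always the single same ad
print(len(set(probabilistic_picks)))  # more than one ad gets shown
```

The practical point is in the second function: an ad can only be picked if it exists in the pool, which is why a wider, more varied pool gives a probabilistic system more to work with.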

Anyone who is involved with growing an e-com brand with Meta needs to understand this:

You need a high volume of distinctly different assets across all stages of awareness, underpinned by the psychological messaging frameworks that drive your core customers to convert. By doing this, you are more likely to serve the right ads to the right people at the right time.

When it comes to performance creative, “just make something that looks good” doesn’t cut it. Every ad you put out into the world is a chance to gather data - to learn what resonates, what converts, and what drives action. The best creatives aren’t just built to impress; they’re built to test. You should be dedicating 20% of your time to big swing concepts and 80% to making incremental progress through iteration.

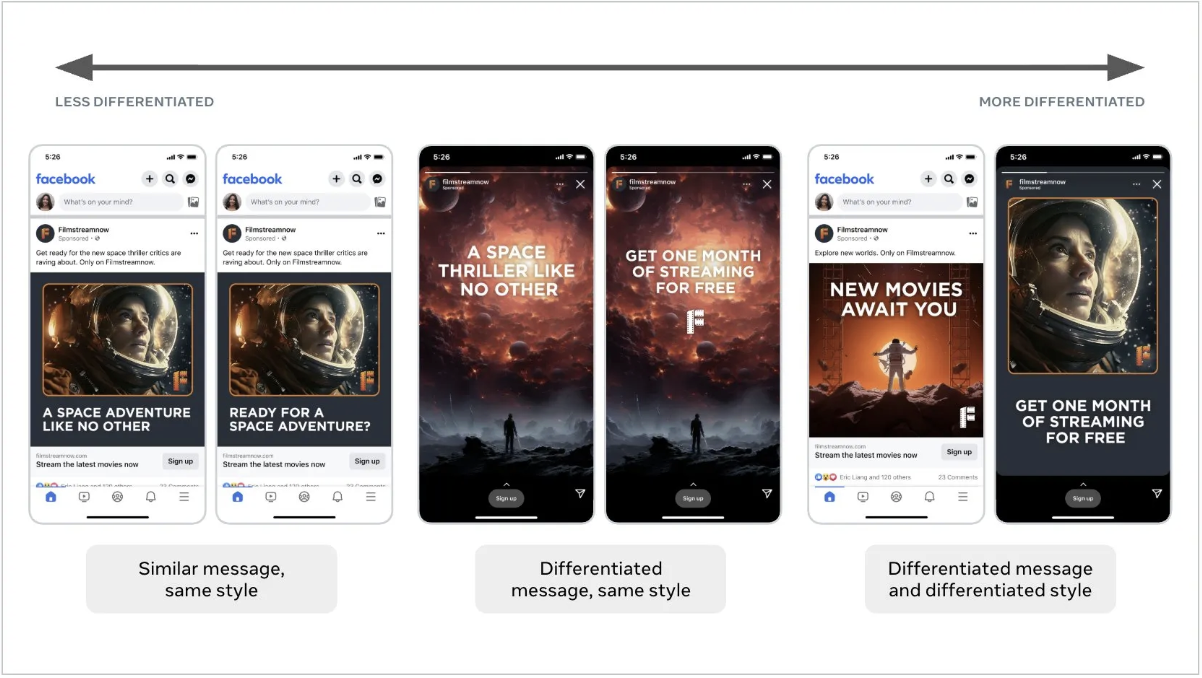

Meta doesn’t just want more ads - it wants different ads.

When you design with testing in mind, you're not just guessing your way to a winning ad. You’re creating a clear path to get there faster using hypothesis-driven thinking, repeatable systems, and smart tweaks that let the data guide the next round.

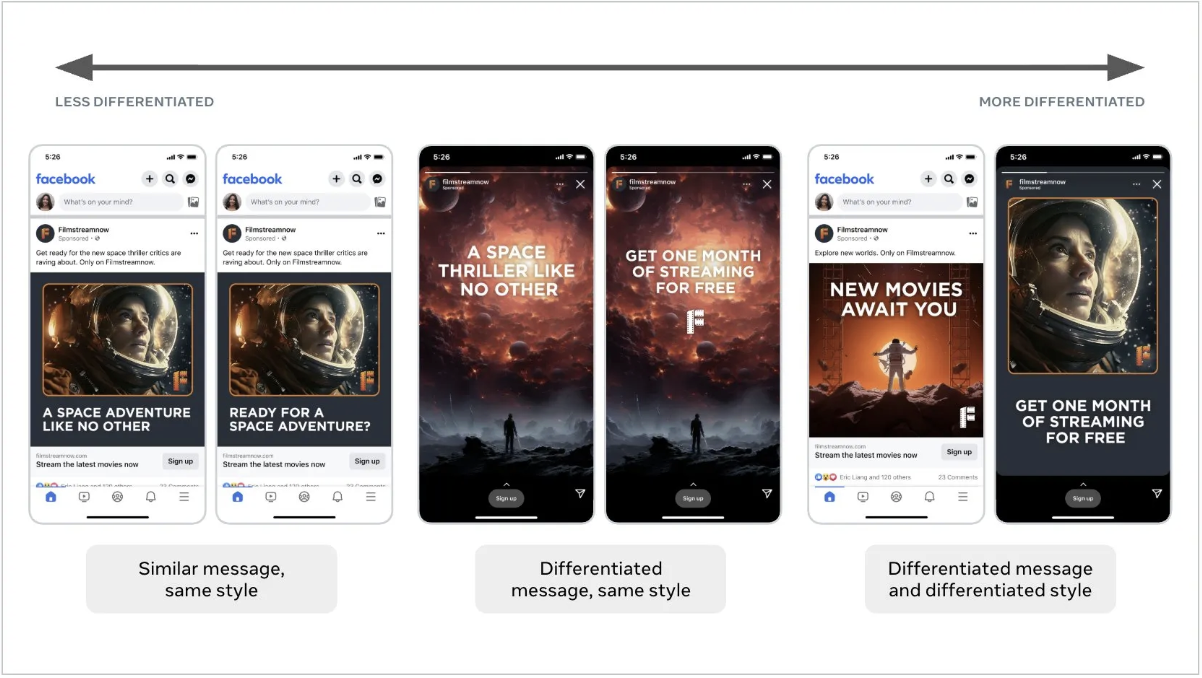

With Meta’s Andromeda update, creative diversification is no longer optional. Ads that look too similar now get grouped and penalised - meaning that iteration must be about variation, not just minor tweaks.

This section breaks down exactly what that looks like, including how to bake experimentation into the bones of your creative process.

Before you even think about shooting or designing anything, you should be thinking like a strategist.

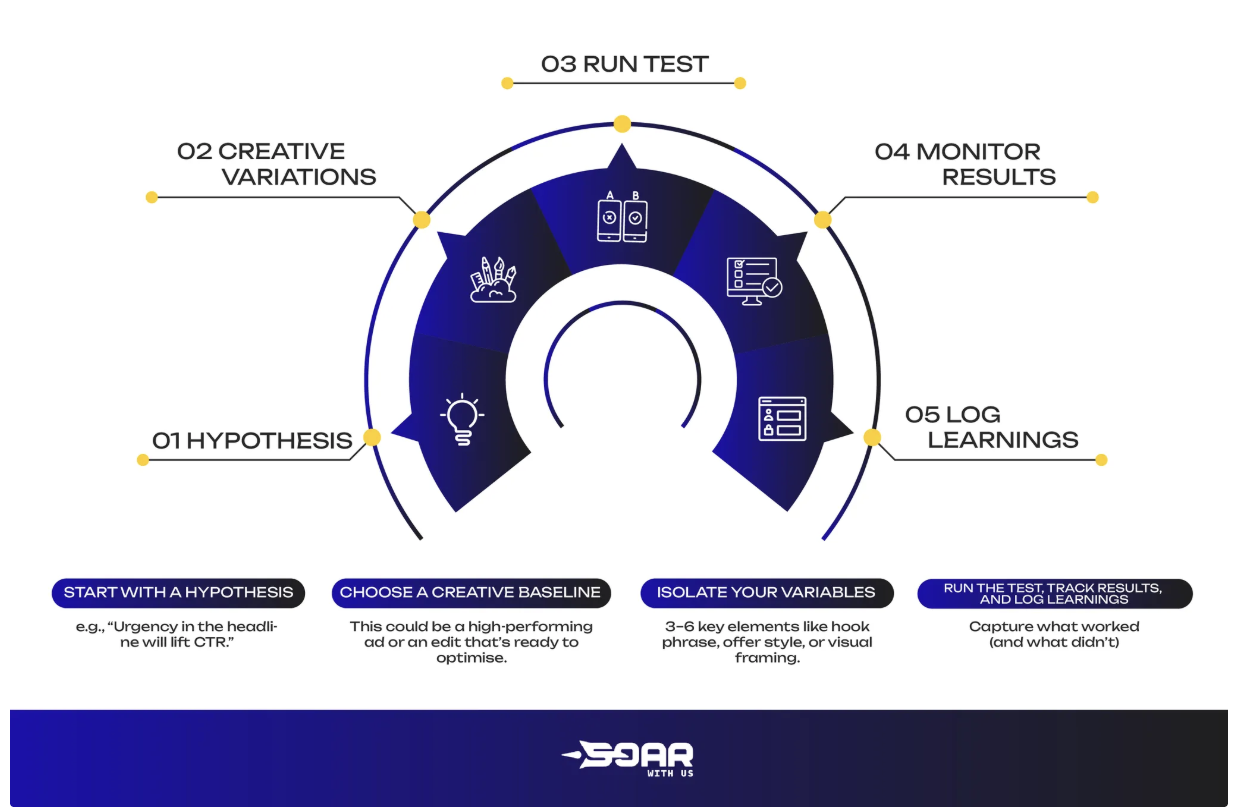

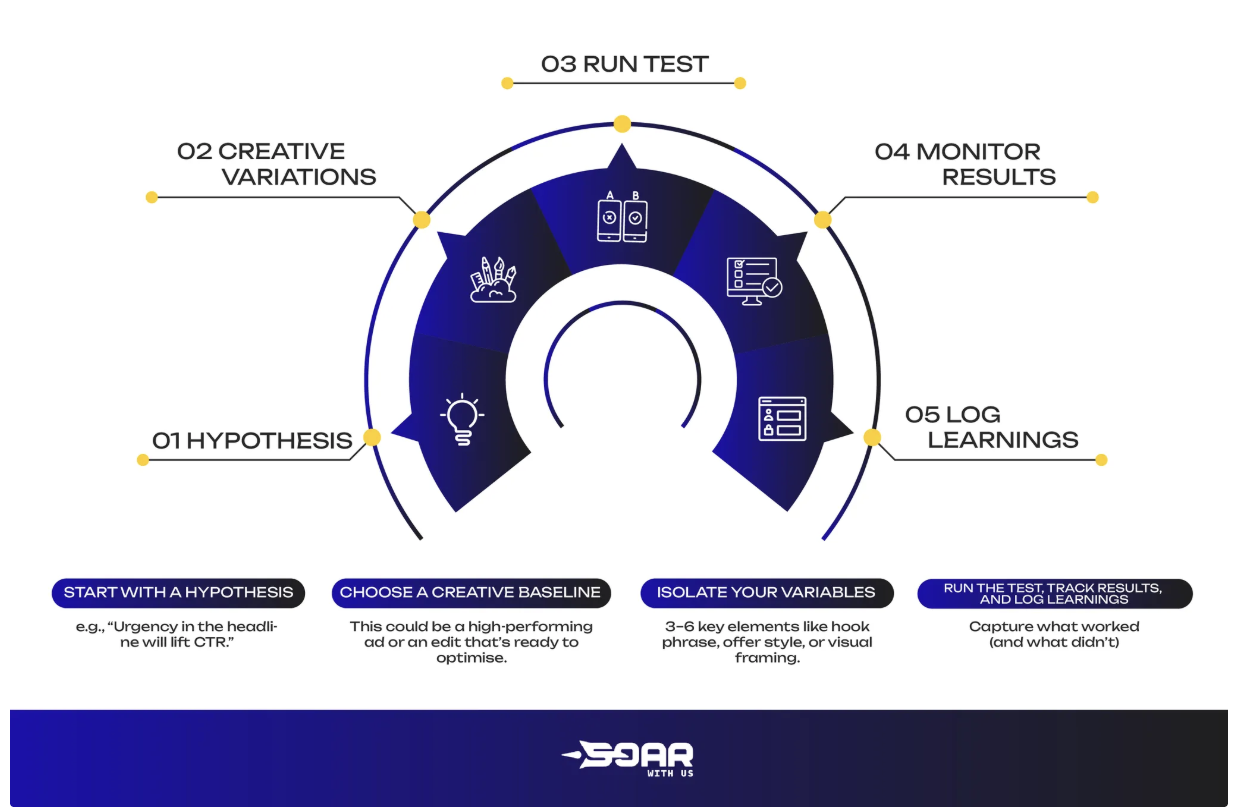

Here’s the testing method we use:

1. Start with a hypothesis: e.g., “Urgency in the headline will lift CTR.”

2. Choose a creative baseline: Identify a top-performing ad concept to optimise. For example, a POV-style video or a before & after.

3. Isolate your variables: 3–6 key elements like the hook, creator, visuals, and format.

4. Run the test, track results, and log learnings: Capture what worked (and what didn’t) in your learnings tracker.

5. Diversify beyond similarity: Variations need to meaningfully differ in look, feel, or motivator - not just copy. Treat the first 3 seconds as a key variable, as Meta uses this to determine similarity.
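If you keep your learnings tracker in code rather than a spreadsheet, the steps above could be captured in a record like the one below. This is a minimal sketch: the class name, field names, and example values are our own assumptions, not a prescribed format.

```python
from dataclasses import dataclass, field


@dataclass
class CreativeTest:
    """One entry in a learnings tracker (hypothetical structure)."""

    hypothesis: str          # step 1: what you expect to happen
    baseline: str            # step 2: the proven concept you are optimising
    variables: list          # step 3: the elements you are isolating
    learnings: list = field(default_factory=list)  # step 4: what you found

    def __post_init__(self) -> None:
        # The method above calls for 3-6 isolated variables per test.
        if not 3 <= len(self.variables) <= 6:
            raise ValueError("isolate between 3 and 6 variables per test")

    def log(self, learning: str) -> None:
        """Record a learning, whether the result was a win or a loss."""
        self.learnings.append(learning)


urgency_test = CreativeTest(
    hypothesis="Urgency in the headline will lift CTR",
    baseline="POV-style video",
    variables=["hook", "creator", "visuals", "format"],
)
urgency_test.log("placeholder: note what worked and what didn't once results are in")
```

Forcing every test to state its hypothesis and variables up front makes it harder to launch an ad that cannot teach you anything.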

When we say "create with testing in mind," we mean that every decision you make, from headline to voiceover tone, should double as a potential test. What learnings can you gather from each creative decision that you make?

Your hook isn’t just the words. It’s the visual moment that defines whether your ad is treated as new. Meta now groups ads as ‘the same’ if the opening scene looks similar, even if the script changes. That means testing hooks must include visual swings - different settings, creators, and styles - not just different lines.

Here’s an example of how tests used to be run…

This leaned into making small changes that focused on:

But these would all be flagged by Meta as looking too similar.

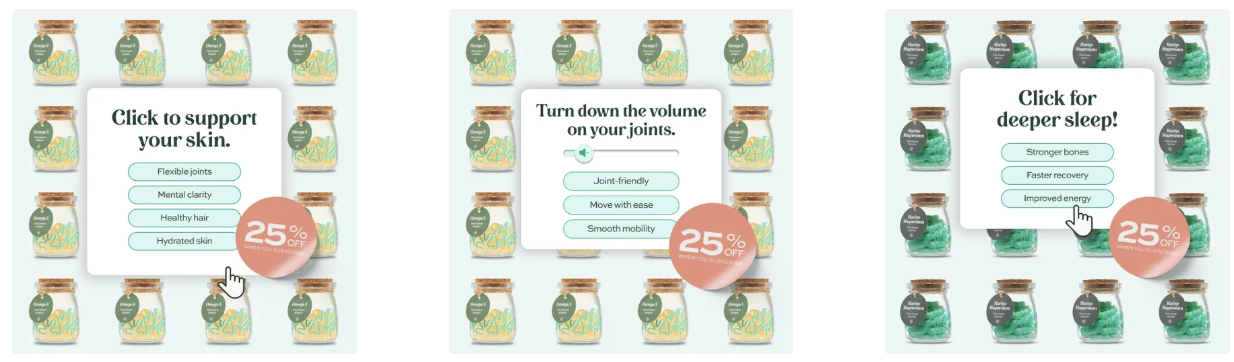

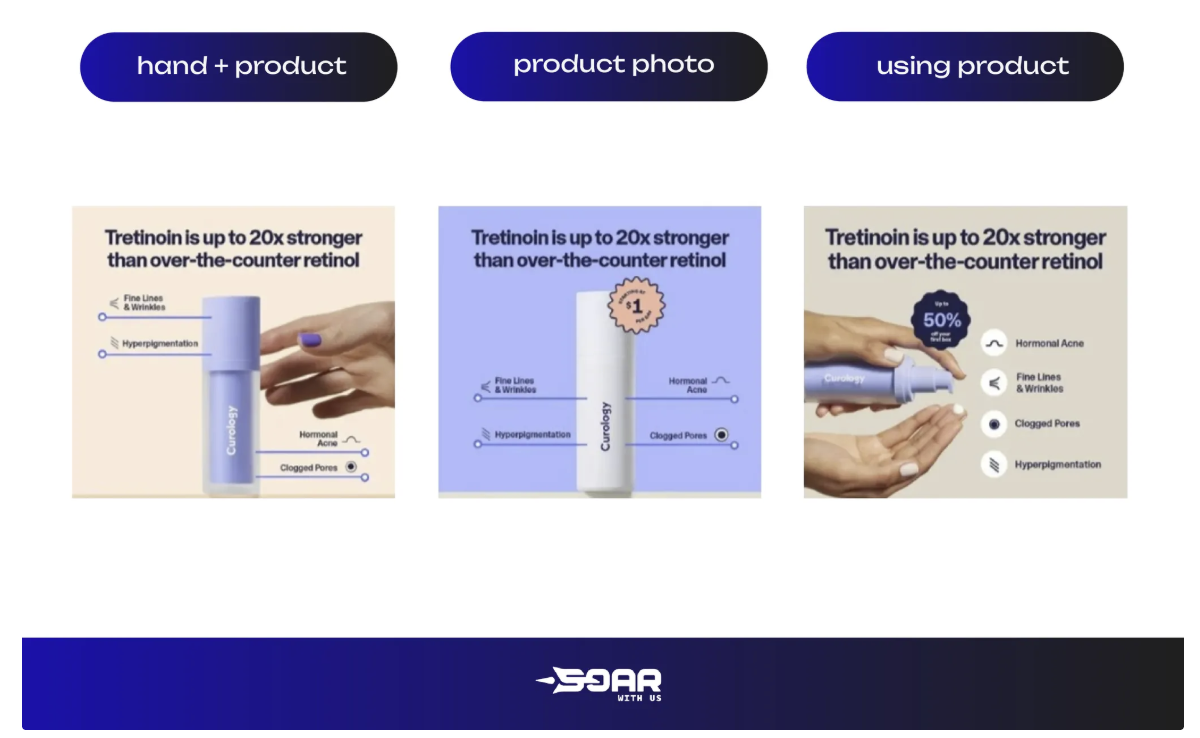

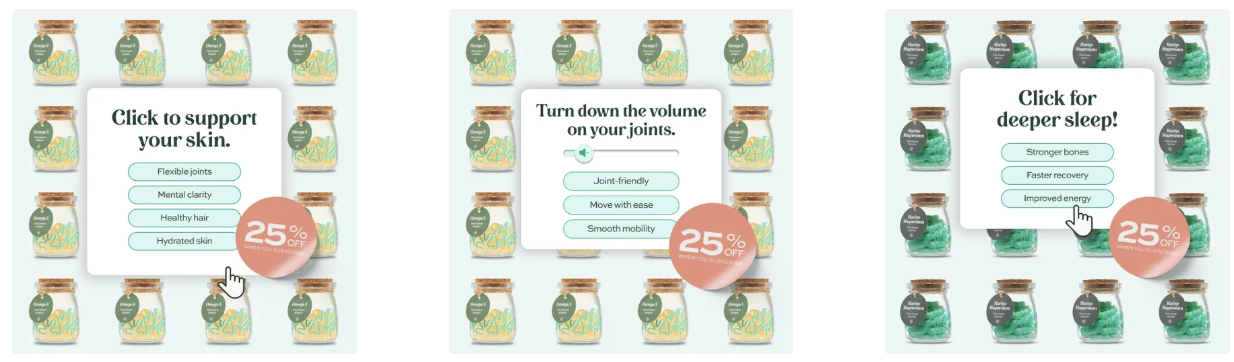

This is what Meta sees as differentiated - while they’re all static examples, the messaging and visuals are completely different.

Going forward:

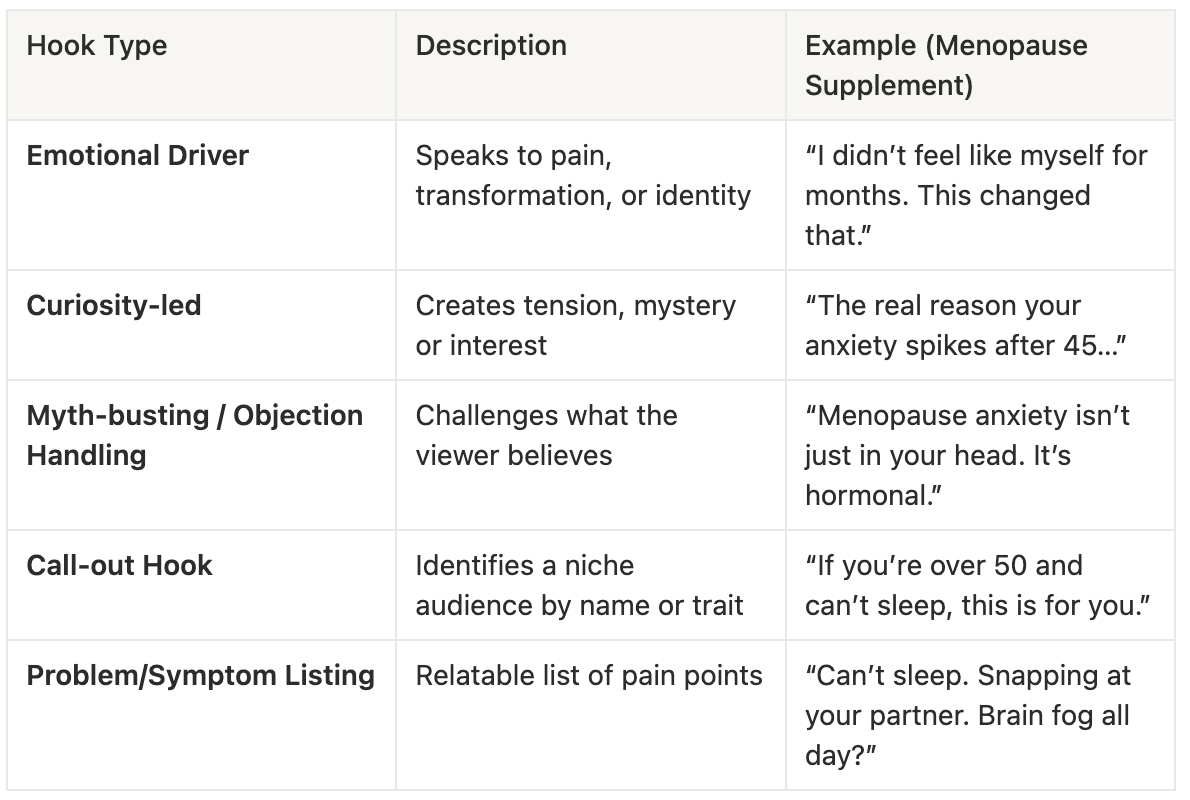

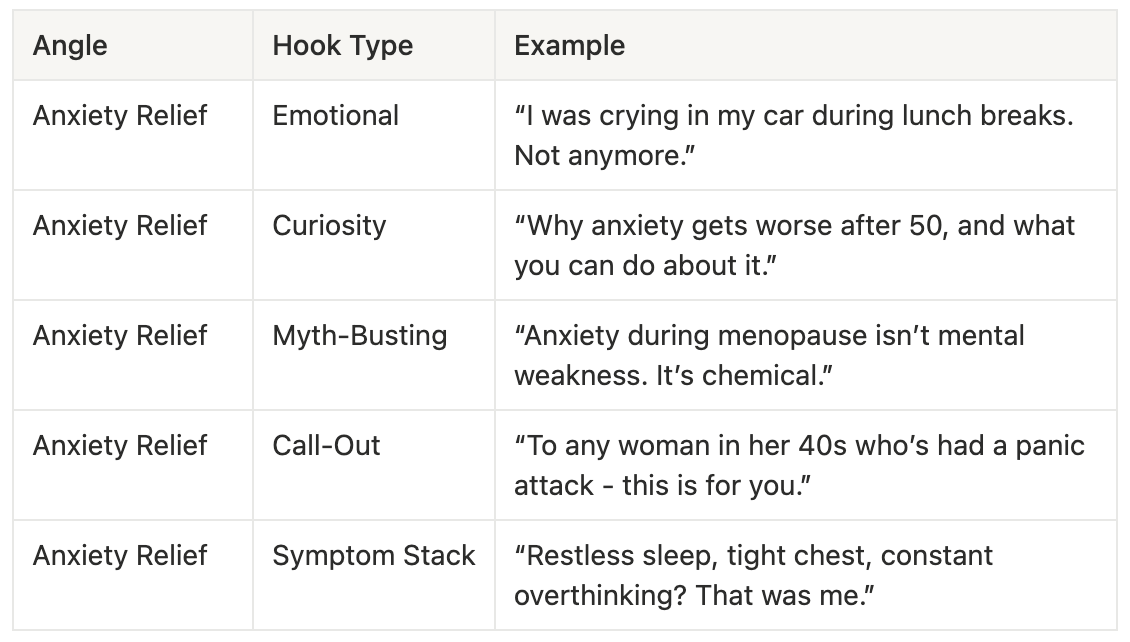

Then there’s angle testing, essentially, the main story or focus of your ad. You can also dial deeper into pain point specificity. Take menopause, for instance, that’s a broad category. But zoom in, and you’ll find very different resonant messages: “brain fog,” “joint pain,” “wrinkles.”

These micro-pain points can each become their own testable angle, each appealing to a slightly different subset of your audience.

That granularity often unlocks a stronger emotional connection and audience segments you might not have known existed without testing.

Angle testing isn’t just about the story you tell - it’s about who you’re telling it to. Meta rewards creative built for different personas. That means pairing each angle with a distinct visual and tone, so the ad not only resonates with a new motivator but also qualifies as a unique concept.

Once you’ve found a winning angle or message, keep that top-performing messaging and angle but change the format and visuals so the ad looks VERY different. It can then deliver to a different individual, one who prefers to consume content in a different way from how the original message was delivered.

For example…

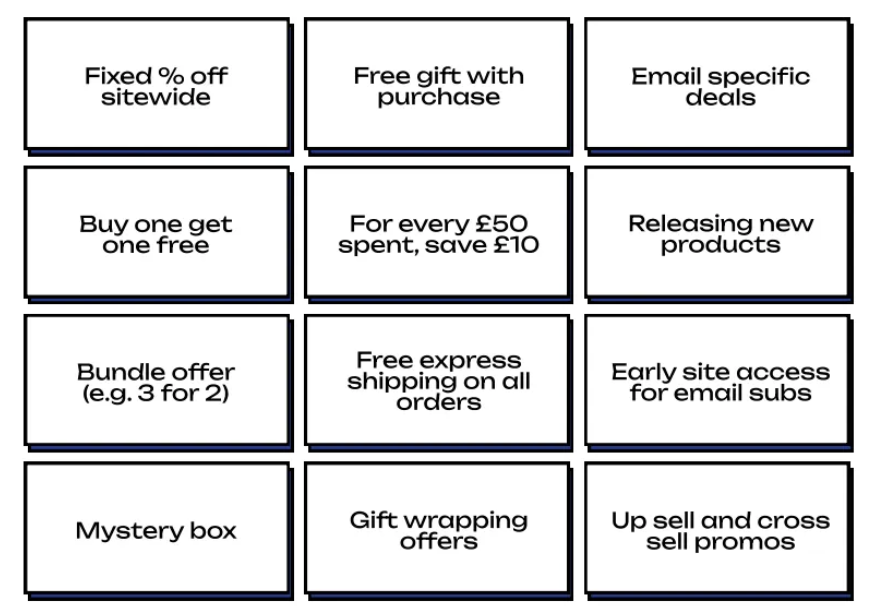

One of the most effective tests you can run is on your offer positioning. Sometimes a small tweak in copy can completely change how compelling it feels.

You might test:

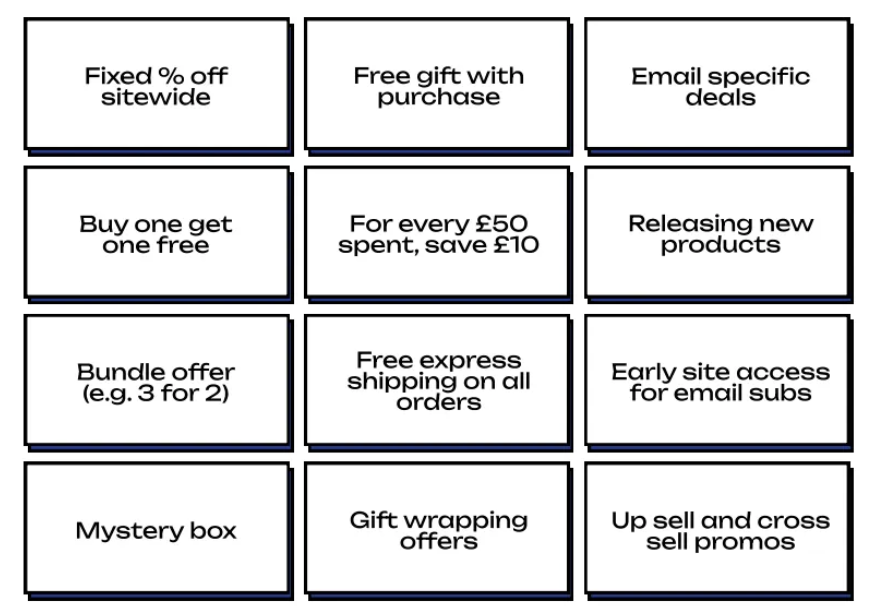

Here’s a bunch of offers you can test to see what resonates most with your audience.

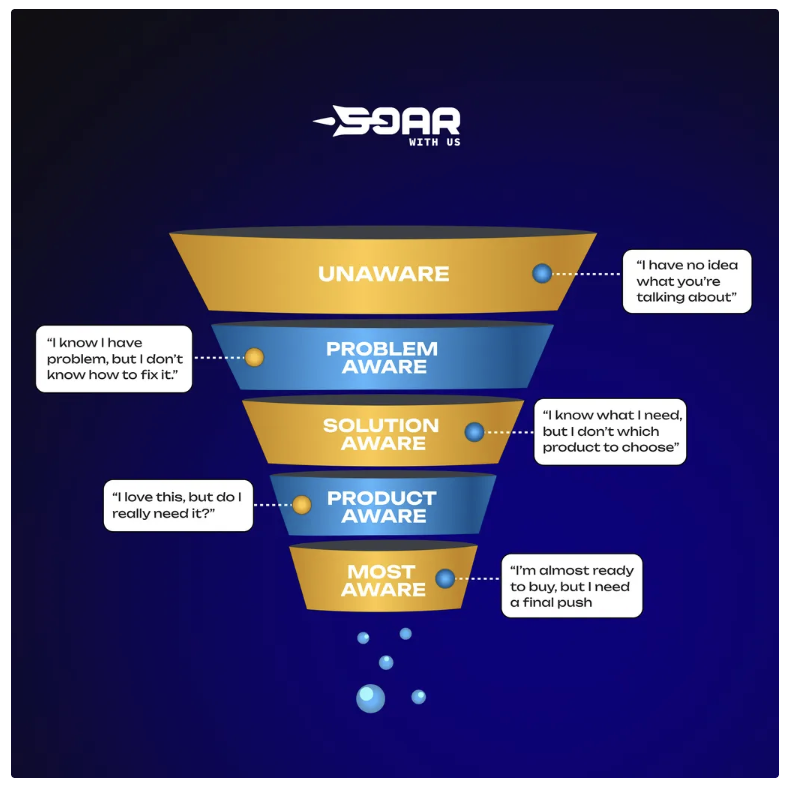

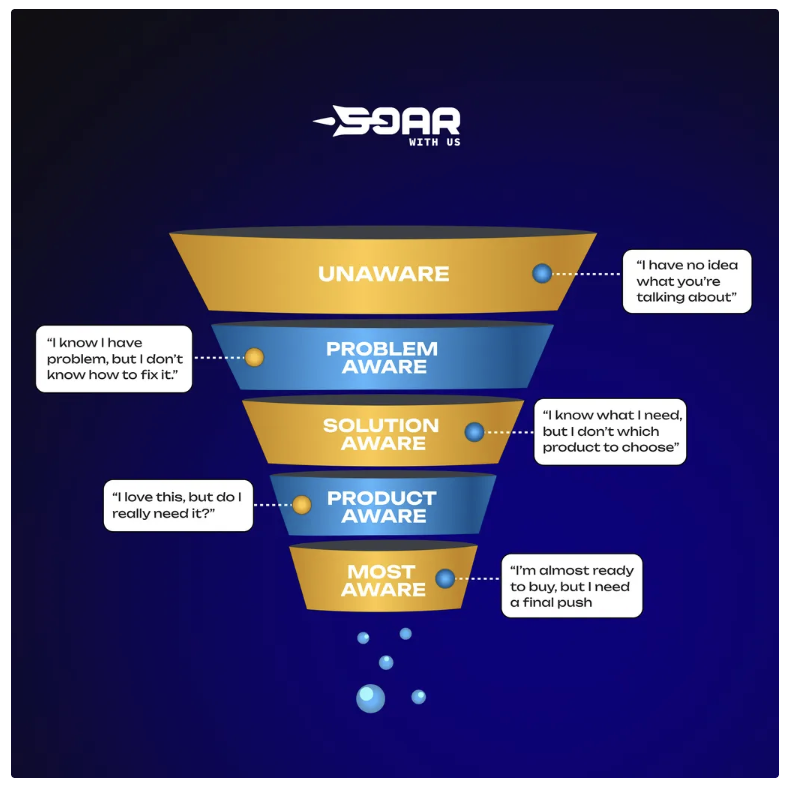

Awareness-Level Messaging: Speak to Where They Are

People interact with your ad differently depending on how aware they are of their problem or your product. Your messaging should reflect that.

Let’s say we’re promoting a gut health supplement:

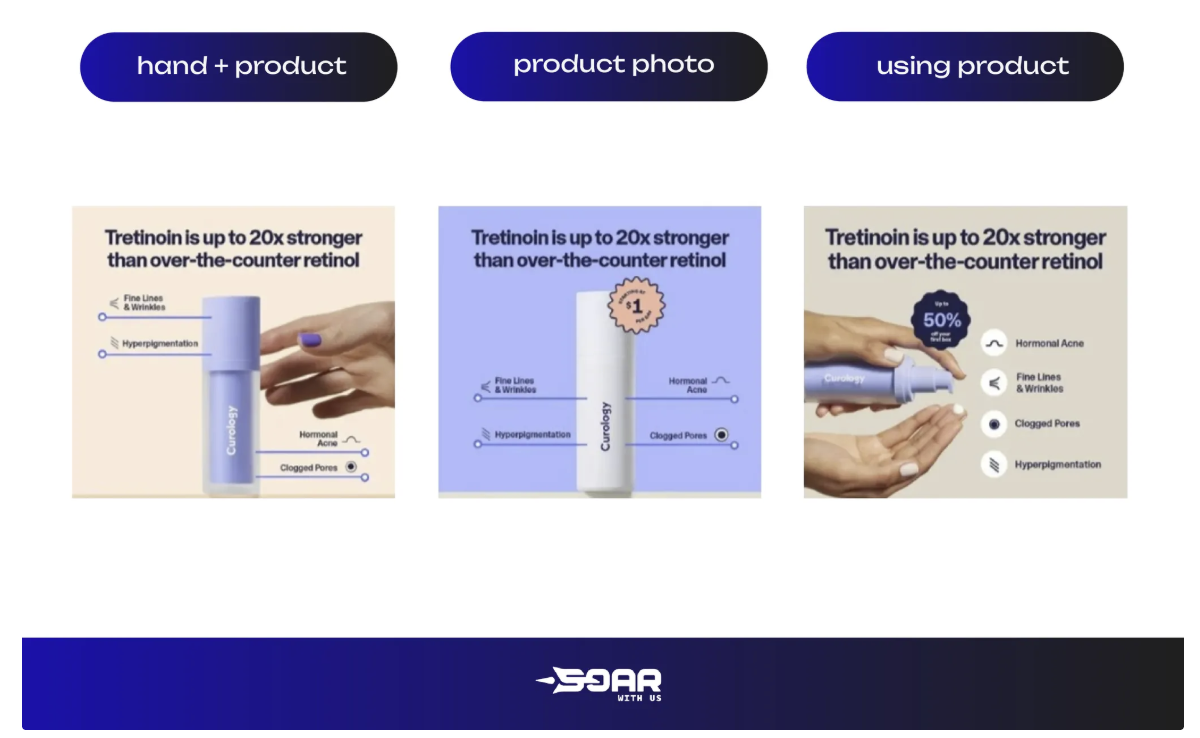

Visual Testing: Change the Frame

The words can stay the same, but the visuals? A major lever. Even subtle shifts in setting, model, or colour palette can make or break performance.

Test visuals like:

Every product has multiple value propositions, but which one resonates most?

Try framing like:

Also test how you present that social proof:

Creative diversification has shifted from a nice-to-have to a growth essential. Ads that look too similar - especially in the first 3 seconds - are grouped, capped, and penalised.

That means testing is no longer just about iterations on a theme, but about building genuinely different concepts that speak to new people.

Here’s how to diversify in practice:

To Wrap It Up...

Creative testing used to be about more ads. Today, it’s about different ads.

Scaling in Meta isn’t about guesswork or re-skinning winners anymore. It’s about building with diversification baked in:

The ads that scale longest and cost least are the ones Meta sees as unique concepts and your audience sees as personally relevant.

When planning briefs, ask yourself:

If you’re serious about building scalable, high-performing ads, your creative should be designed for it.

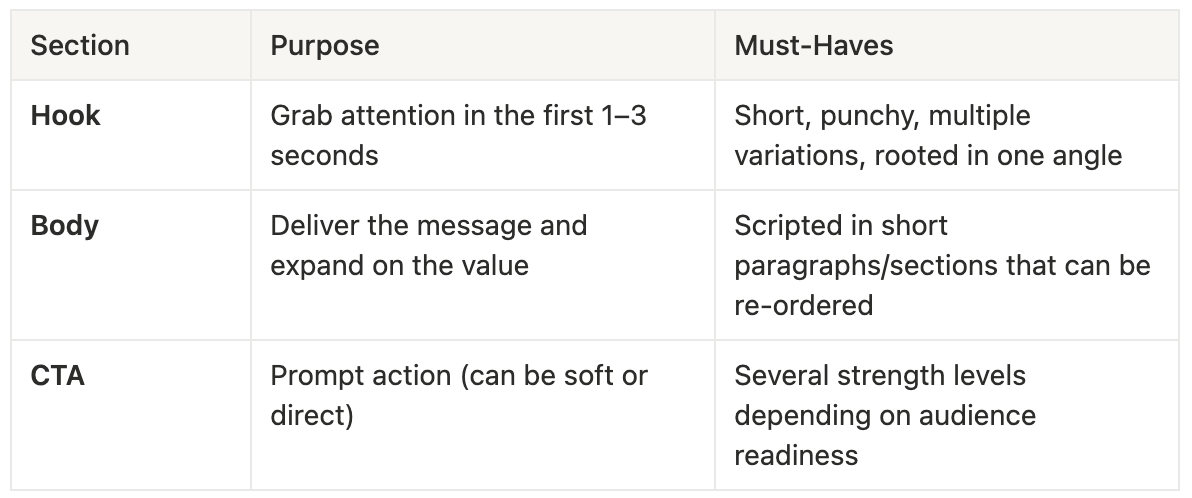

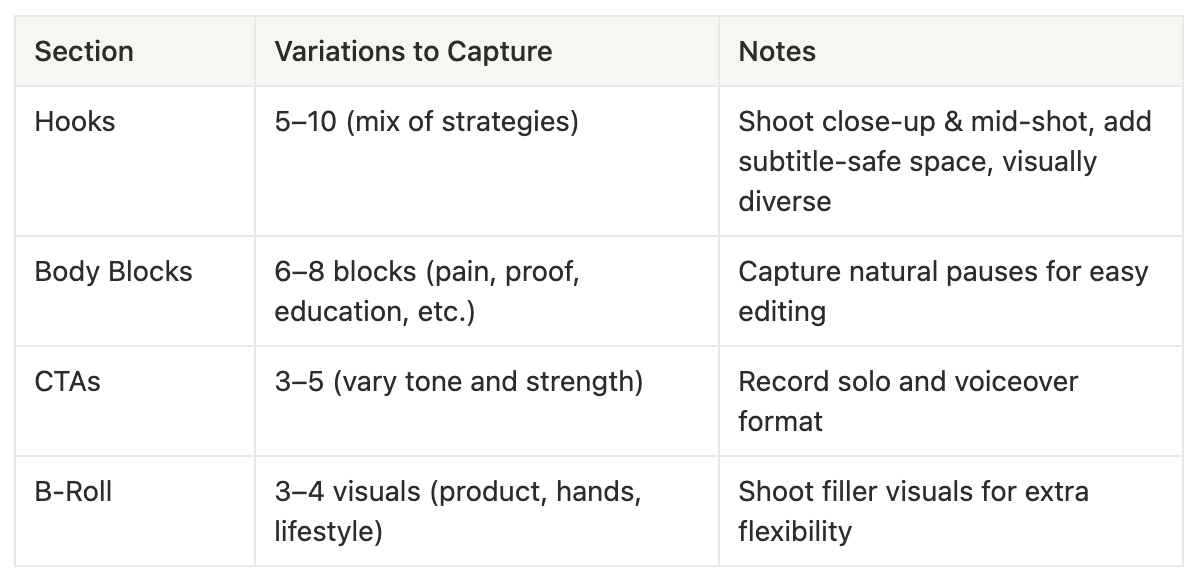

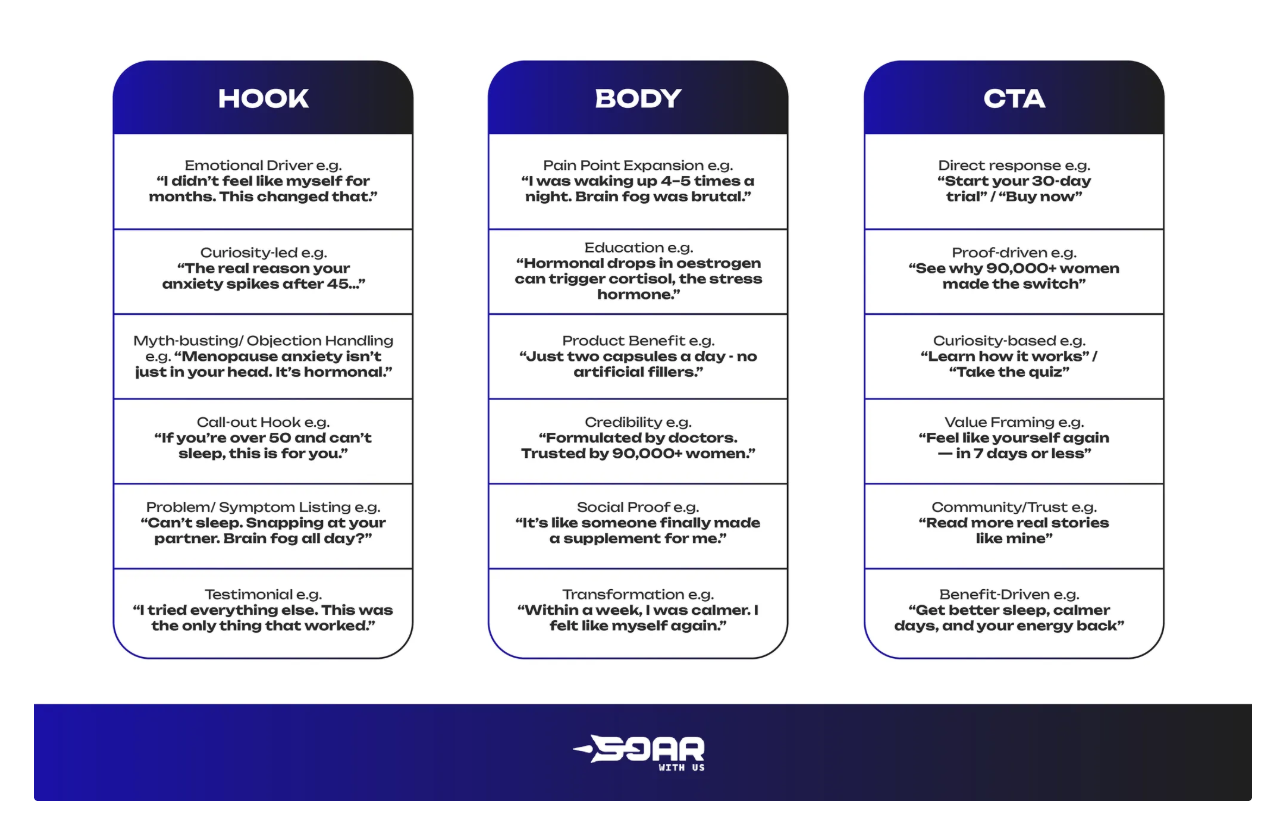

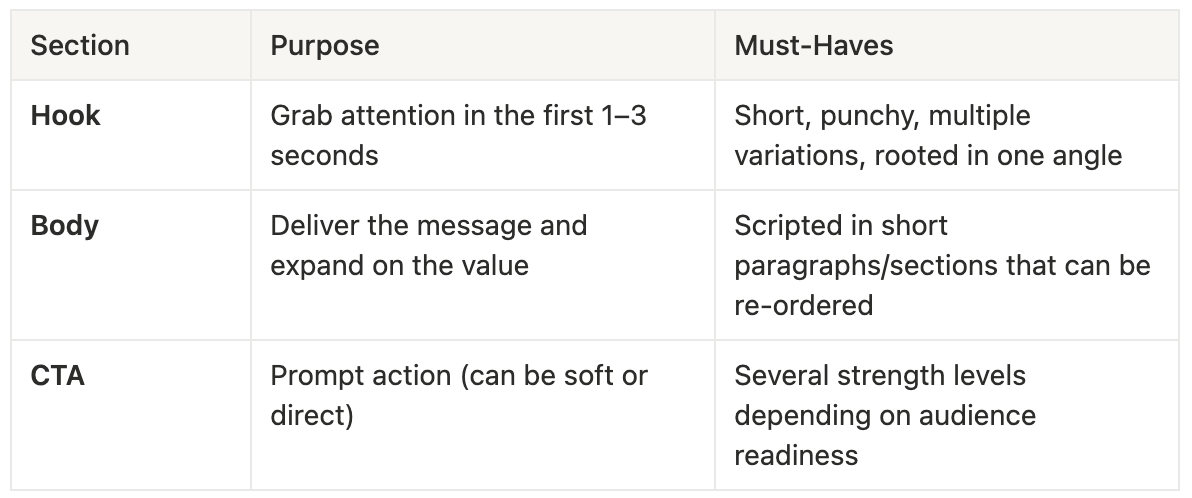

That means structuring, scripting and shooting content in a way that allows you to pull it apart, reassemble it, and test different combinations of hooks, bodies, and CTAs without having to reshoot everything from scratch.

In this section, we’ll break down how to approach creative architecture in a test-friendly way.

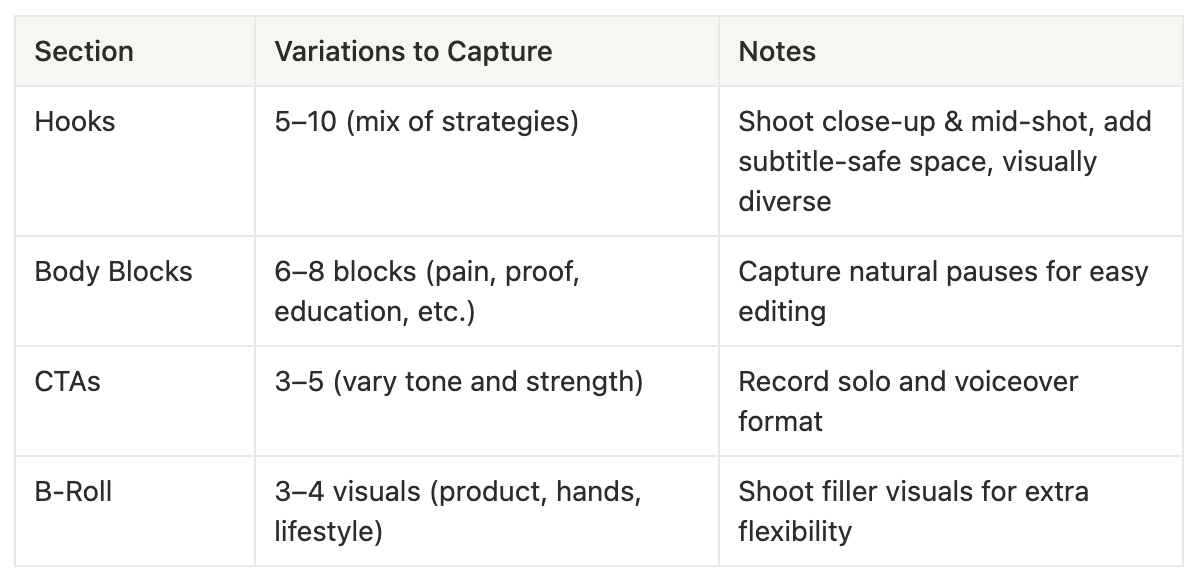

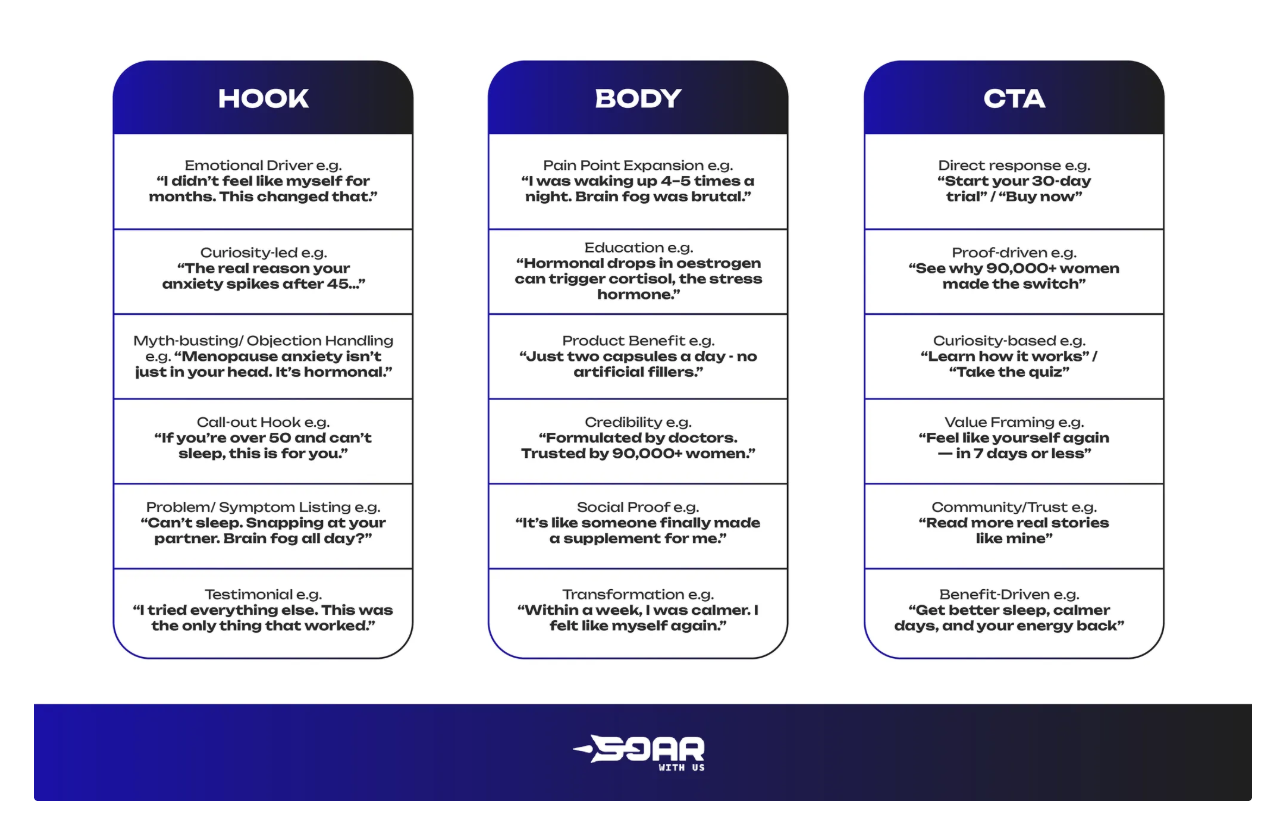

Your goal is to script and shoot in three interchangeable sections:

Each section should be written with 3–5 variations that hit the same angle from different emotional or messaging styles.
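To see how quickly modular footage multiplies, here is a short Python sketch that combines three hooks, three bodies, and three CTAs into every possible edit. The section labels are placeholders we made up; swap in your own.

```python
from itertools import product

# Placeholder labels for separately shot sections (hypothetical examples).
hooks = ["question hook", "bold claim hook", "testimonial hook"]
bodies = ["problem-solution body", "demo body", "social-proof body"]
ctas = ["shop now", "learn more", "take the quiz"]

# Every hook x body x CTA combination becomes one candidate edit.
edits = [
    {"hook": hook, "body": body, "cta": cta}
    for hook, body, cta in product(hooks, bodies, ctas)
]

print(len(edits))  # 27 candidate edits from 9 recorded sections
```

Not every combination will make sense or look different enough to count as a new concept, so treat the full list as a menu to choose from rather than a launch plan.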

Let’s walk through how to approach each one in granular detail.

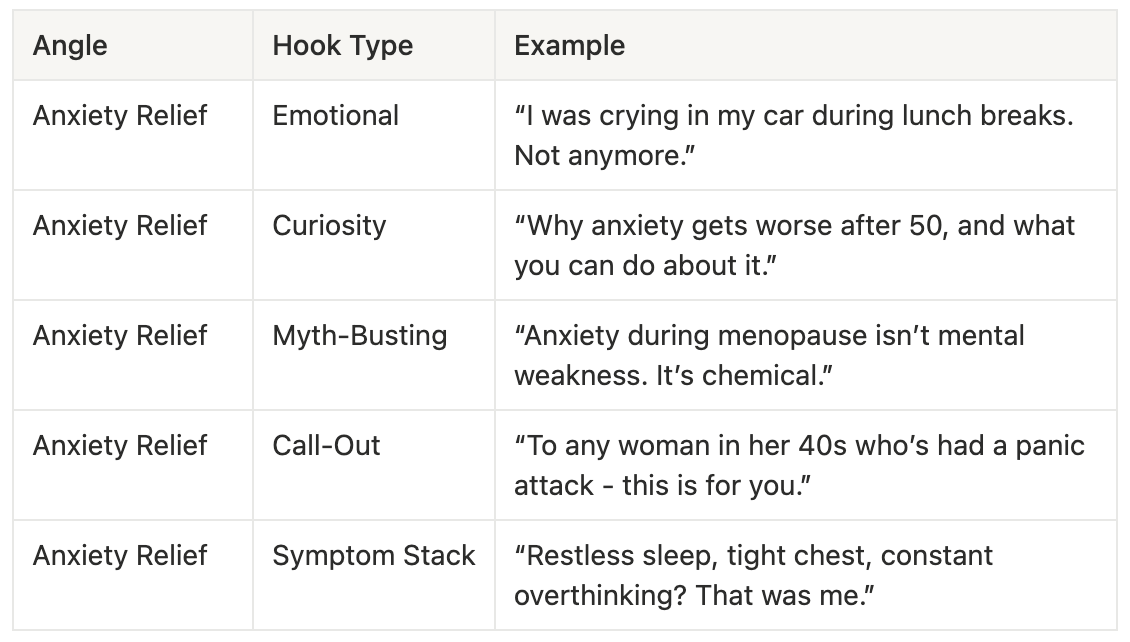

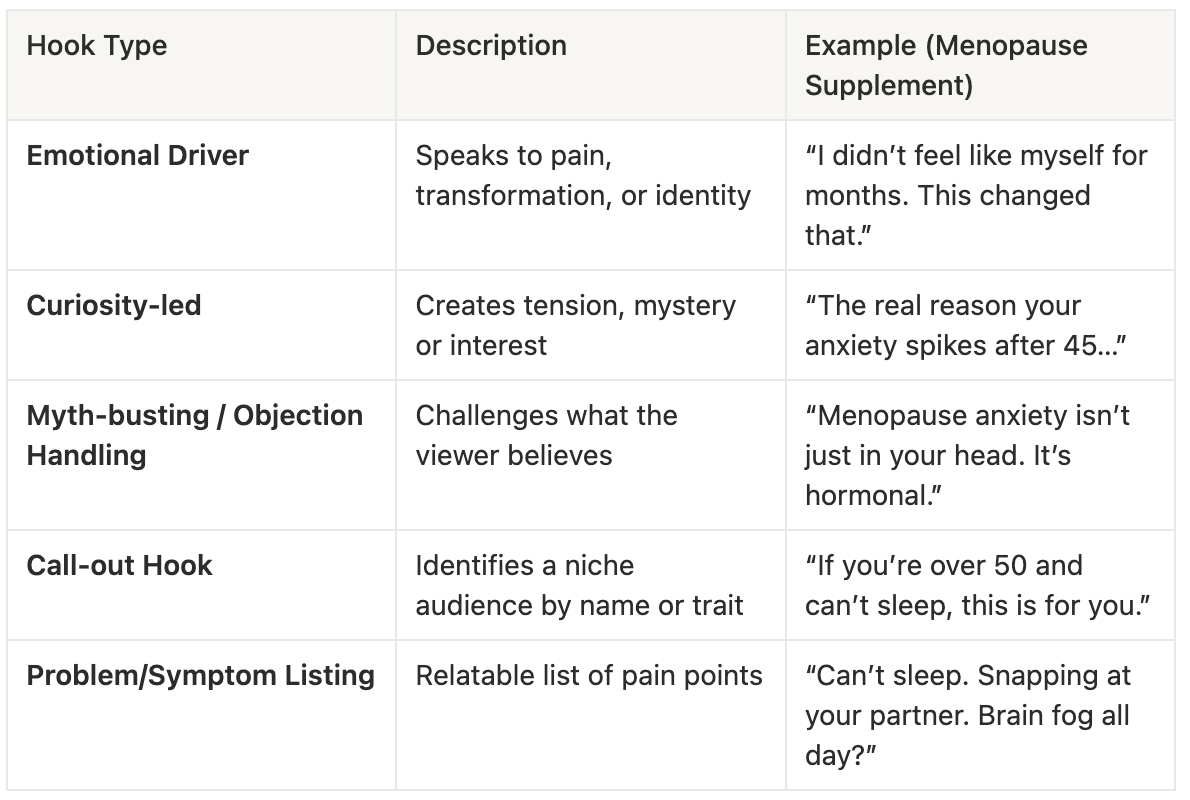

Hooks are arguably the number one most important part of an ad.

They are your scroll-stopper. And your ad should never rely on just one.

Instead, script hooks across different strategy types with different angles and formats.

For example:

🎬 Tip: Record hooks separately from the body. Keep them short (1–2 lines), and shoot with different tone options, or different creators which may resonate with different audiences.

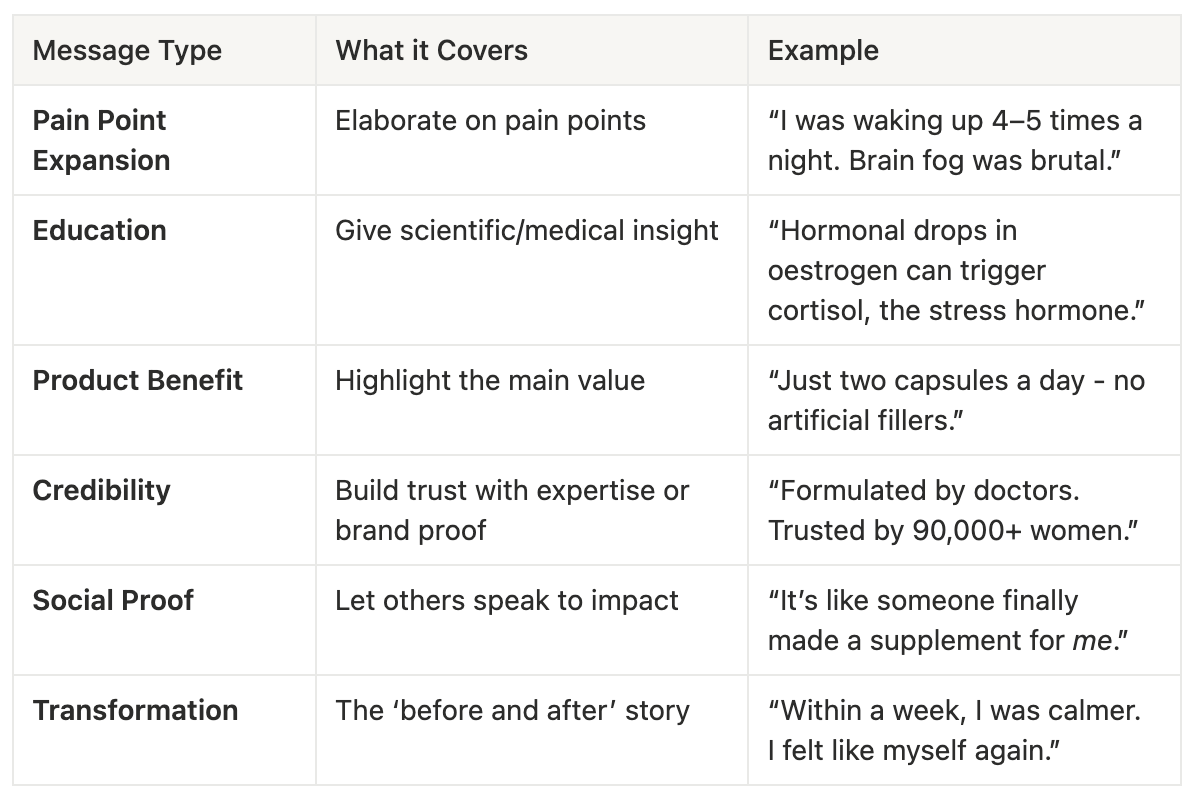

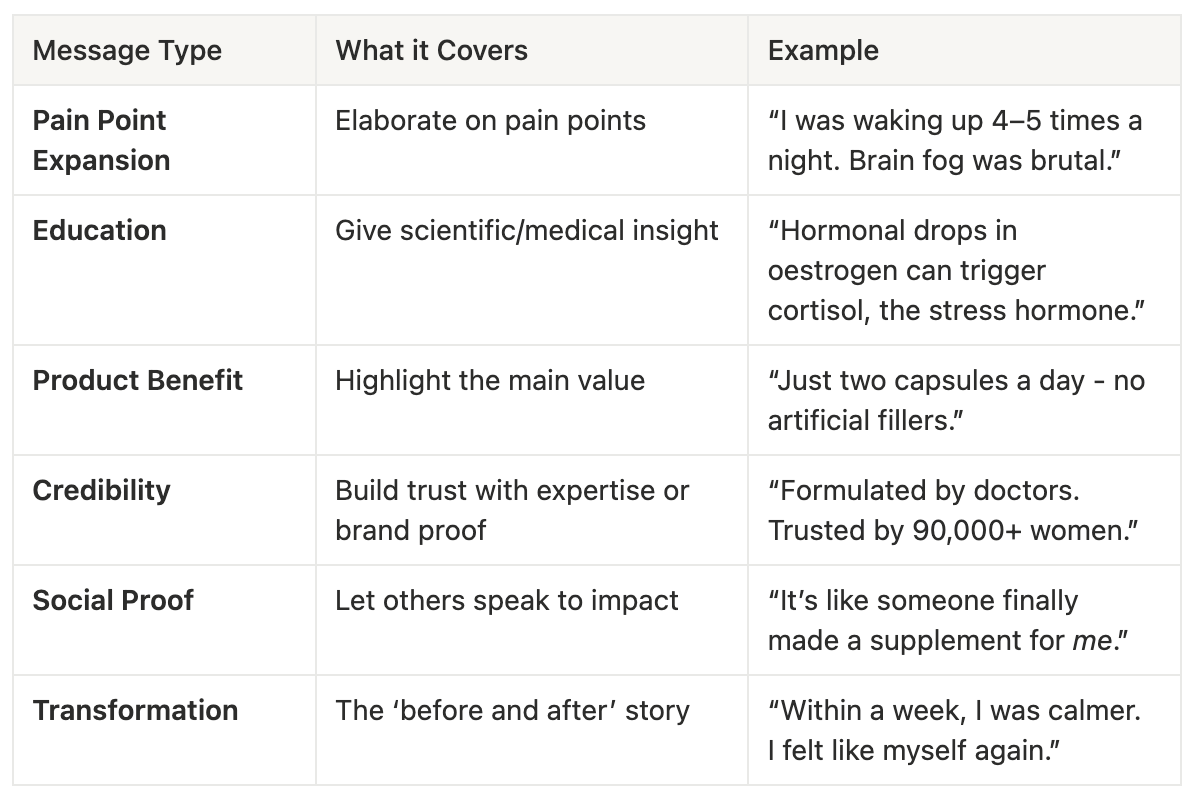

Once you’ve got their attention, the body of the ad needs to deliver the story. But the key is: write and shoot the body in testable blocks, not one flowing monologue.

Each “block” should be:

Think of these as plug-and-play building bricks that can be reordered or swapped based on the hook or CTA.

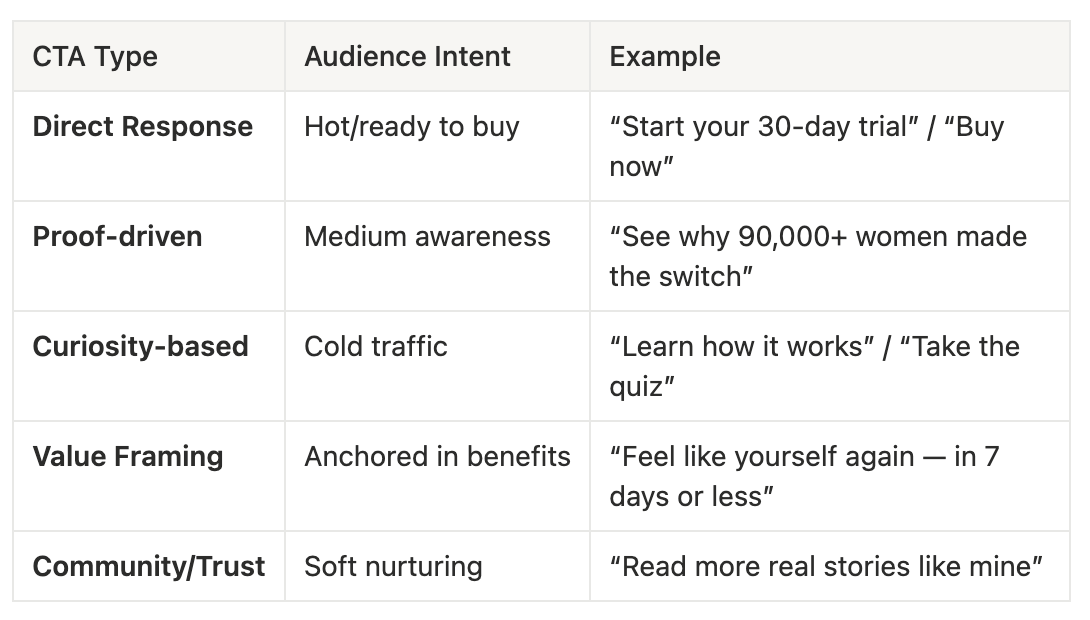

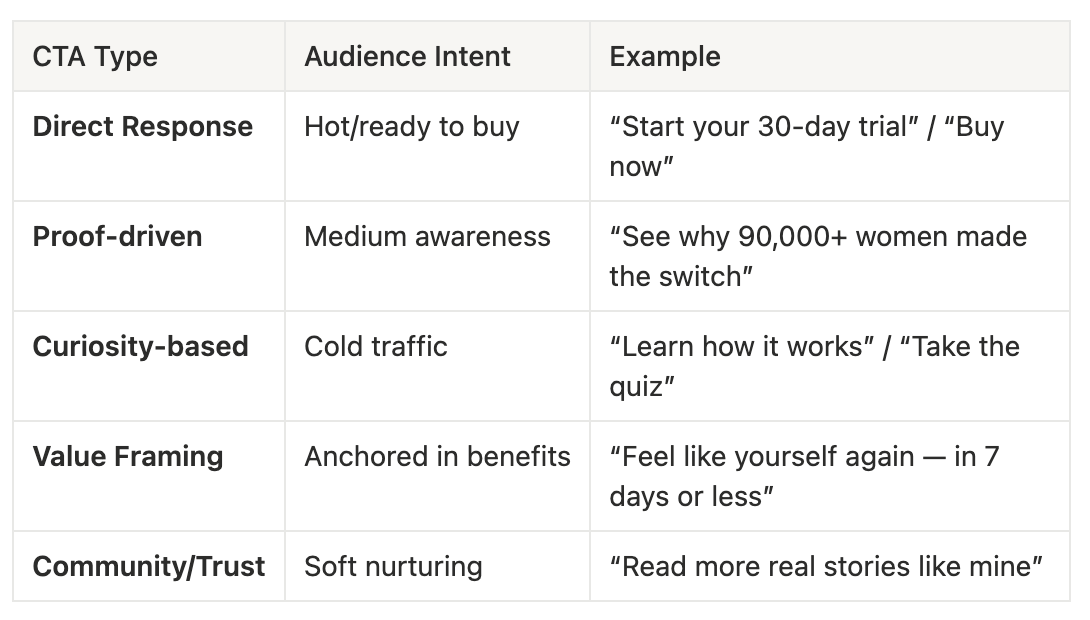

A good CTA is not always “Buy now.” You need to match audience intent and awareness level…

Here’s a template shooting structure you can copy and adapt for any campaign.

If you walk away with one insight from this section, let it be this:

Don’t build ads. Build toolkits.

The highest-performing brands don’t rely on a perfect 60-second ad. They build modular, flexible, testable creative systems.

Now you can too!

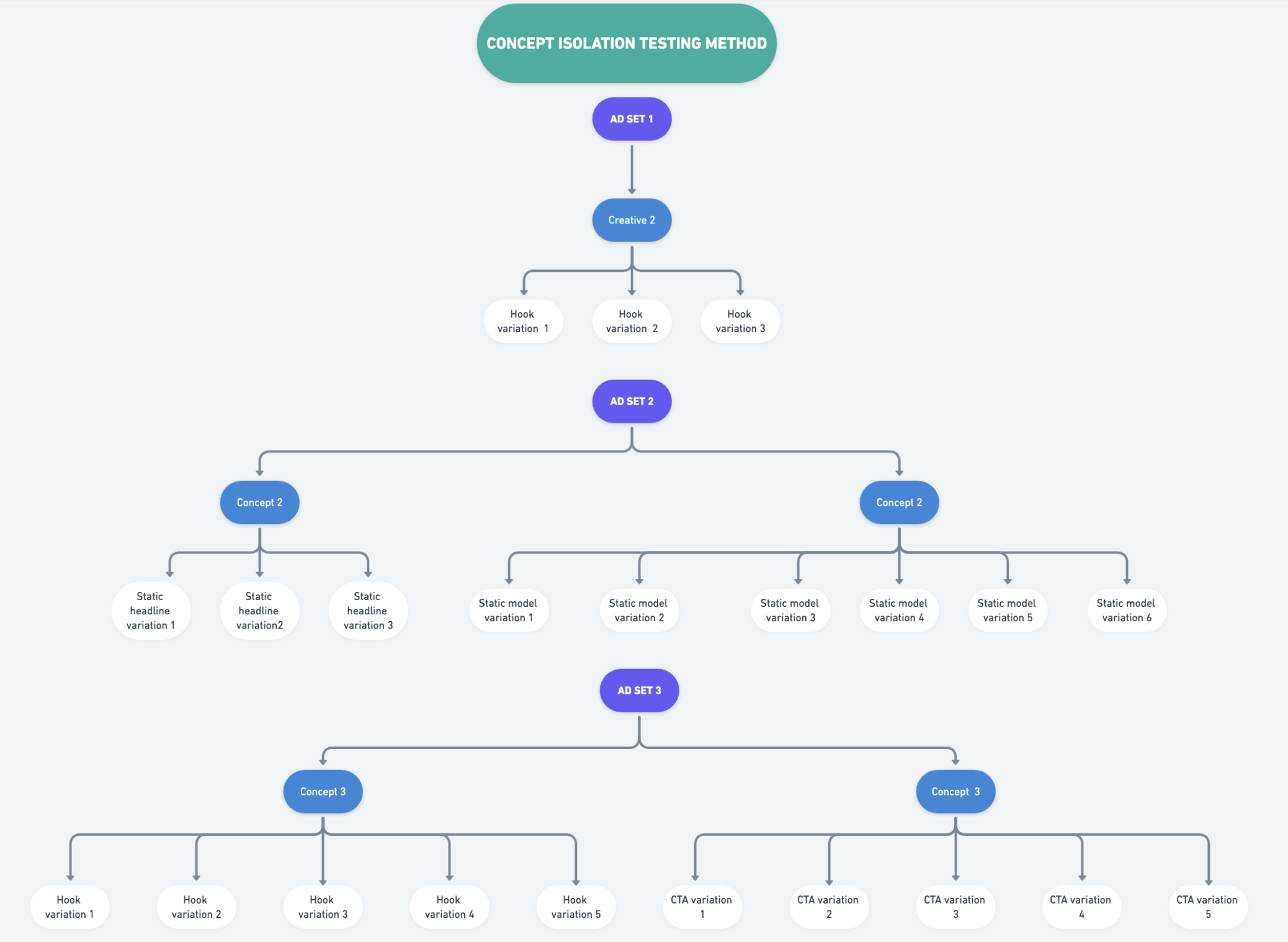

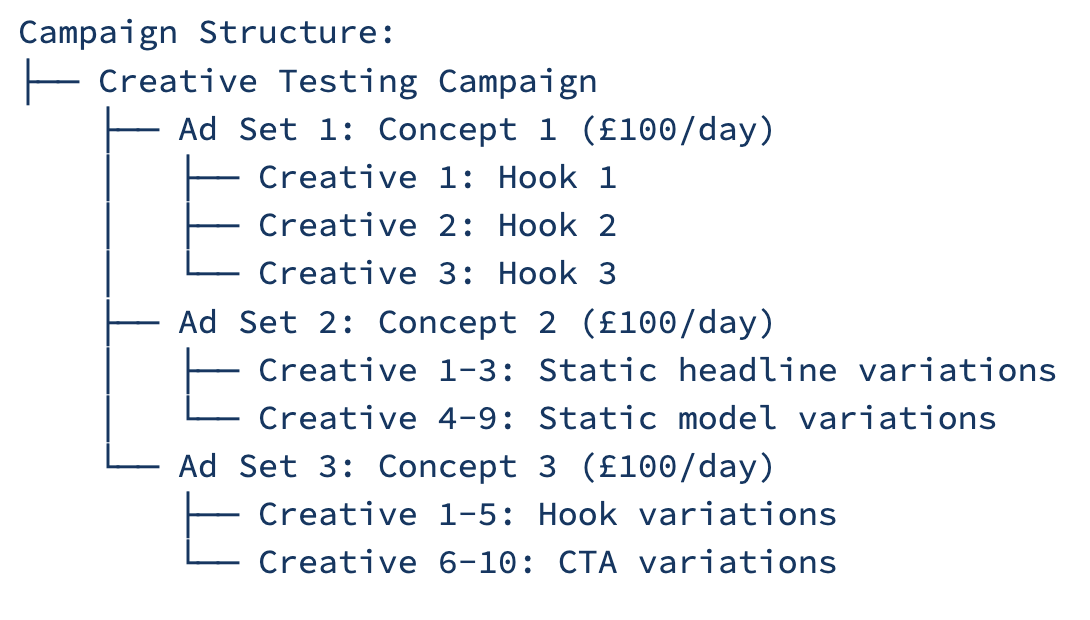

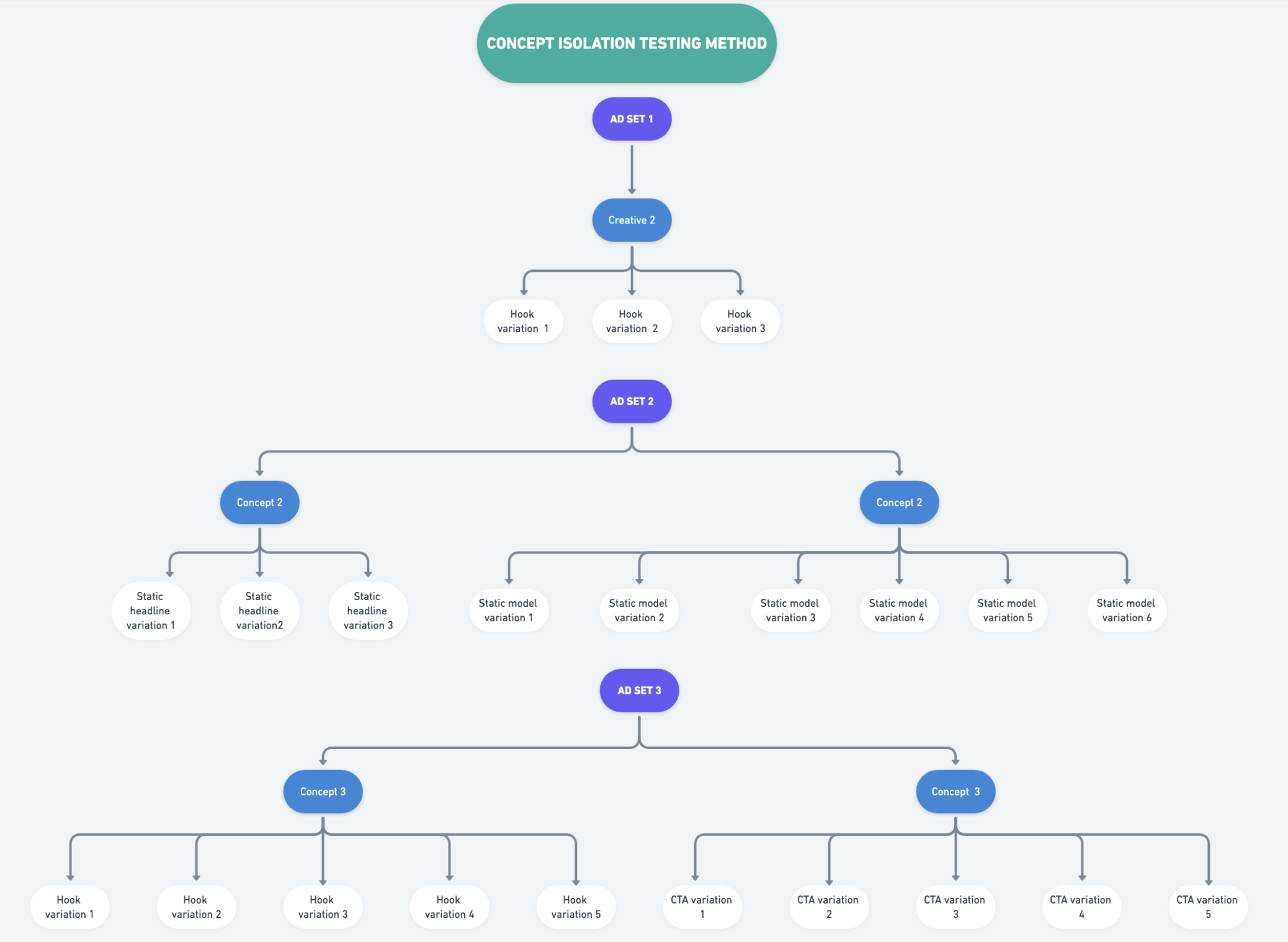

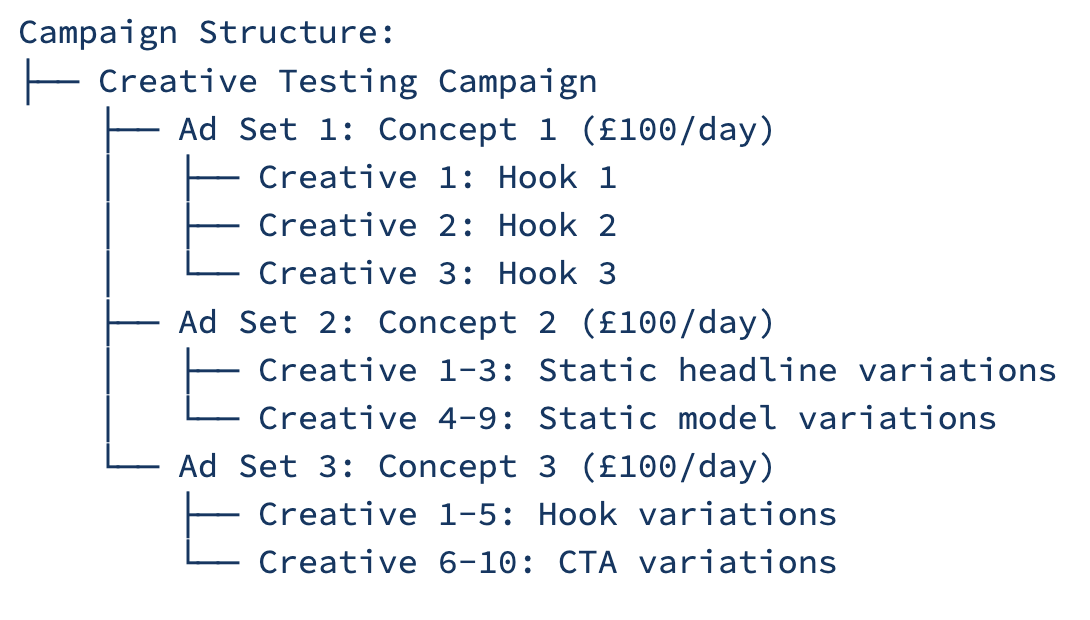

Dialled-in creative testing is the difference between “nice ads” and repeatable revenue. On Meta, the fastest path to truth is isolation - giving each idea, audience, or asset its own lane so the algorithm can hand you a clean verdict. This guide walks you through three battle-tested tiers, matched to the size of your testing budgets:

In a nutshell, concept isolation testing works by spinning up separate ad sets for each big idea e.g., “Sleep-better angle”, “Doctor-endorsed angle”, “Price-drop angle”. Each ad set contains 3-15 creatives that only vary micro-elements like hooks or CTAs, so the ad-set results cleanly tell you which concept drives demand.

When you have the firepower to fund multiple learning funnels in parallel, the smartest first question isn’t necessarily “Which thumbnail?” - it’s “Which idea resonates?” Concept isolation testing answers that question quickest, protects large budgets from diffuse spending, and sets the stage for other downstream tests once you’ve crowned a hero concept.

Why it works for higher budgets

When to use

Structure: Concept-separated ad sets

Budget: AOV * 3+

Target: 50+ conversions per ad set weekly

Ad set creative volume: 3-15 creatives per concept

Cadence: 3-7 days

Testing volume: 50+ creatives a week

This example assumes a testing budget of ~£100k and an AOV of ~£30 meaning a £100 daily ad set budget is a sound approach. The approach is agile due to budget flexibility.
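The arithmetic behind that example can be sketched in a few lines of Python. The function name is our own; the inputs are the AOV multiple and the figures quoted above.

```python
def min_daily_ad_set_budget(aov: float, multiplier: float) -> float:
    """Daily ad set budget floor, expressed as a multiple of AOV."""
    return aov * multiplier


# Concept isolation: budget of AOV * 3+, with an AOV of about £30.
floor_budget = min_daily_ad_set_budget(aov=30, multiplier=3)
example_budget = 100  # the daily ad set budget used in the example above

print(floor_budget)                    # 90
print(example_budget >= floor_budget)  # True: £100 clears the £90 floor
```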

Simplified campaign structure

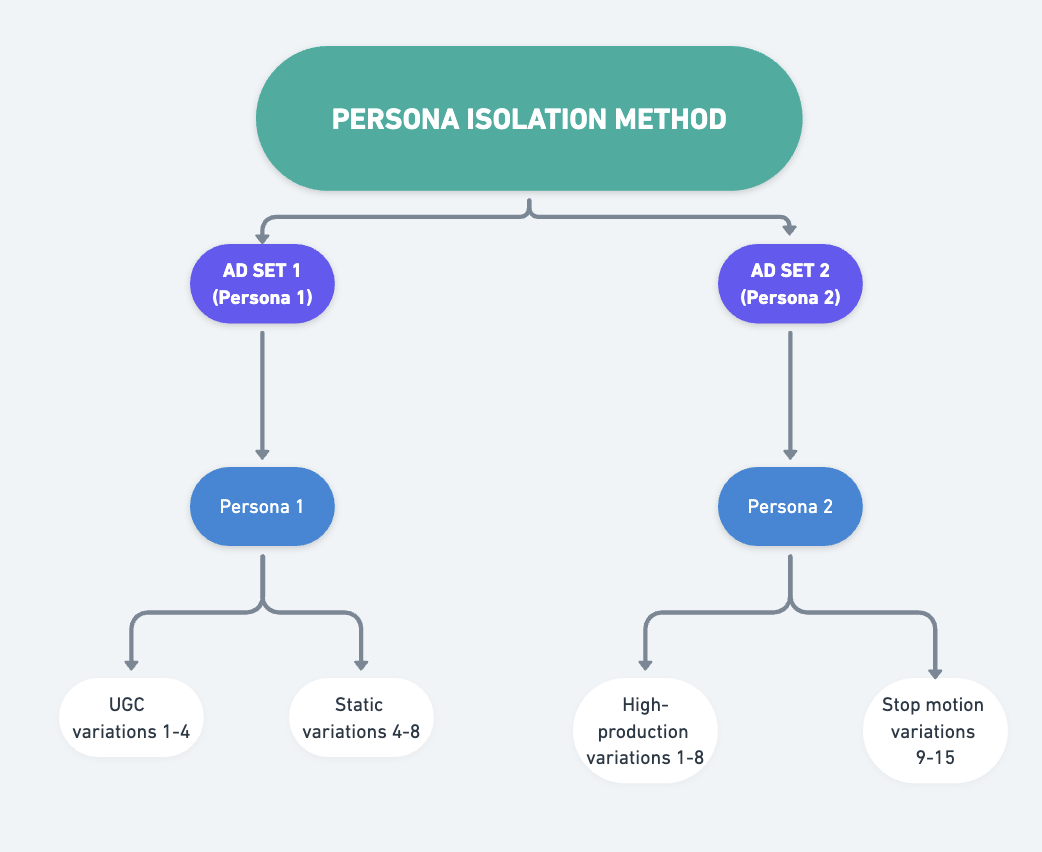

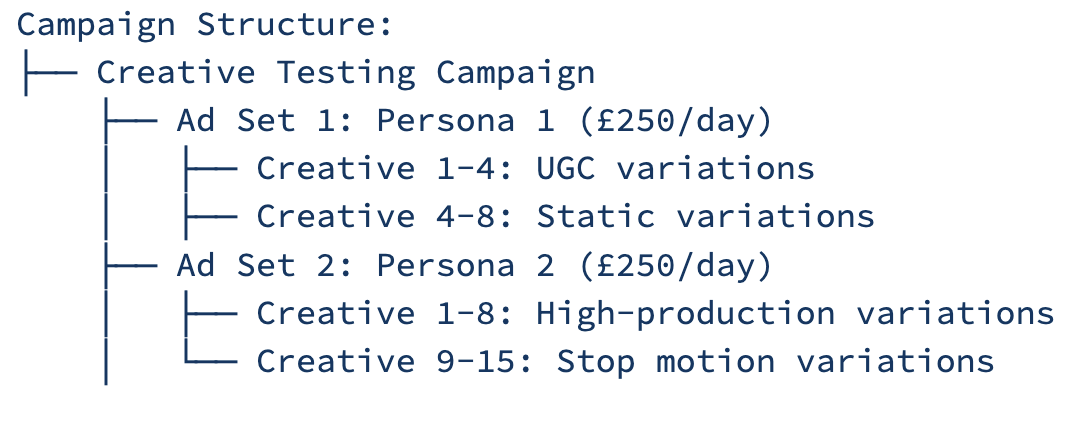

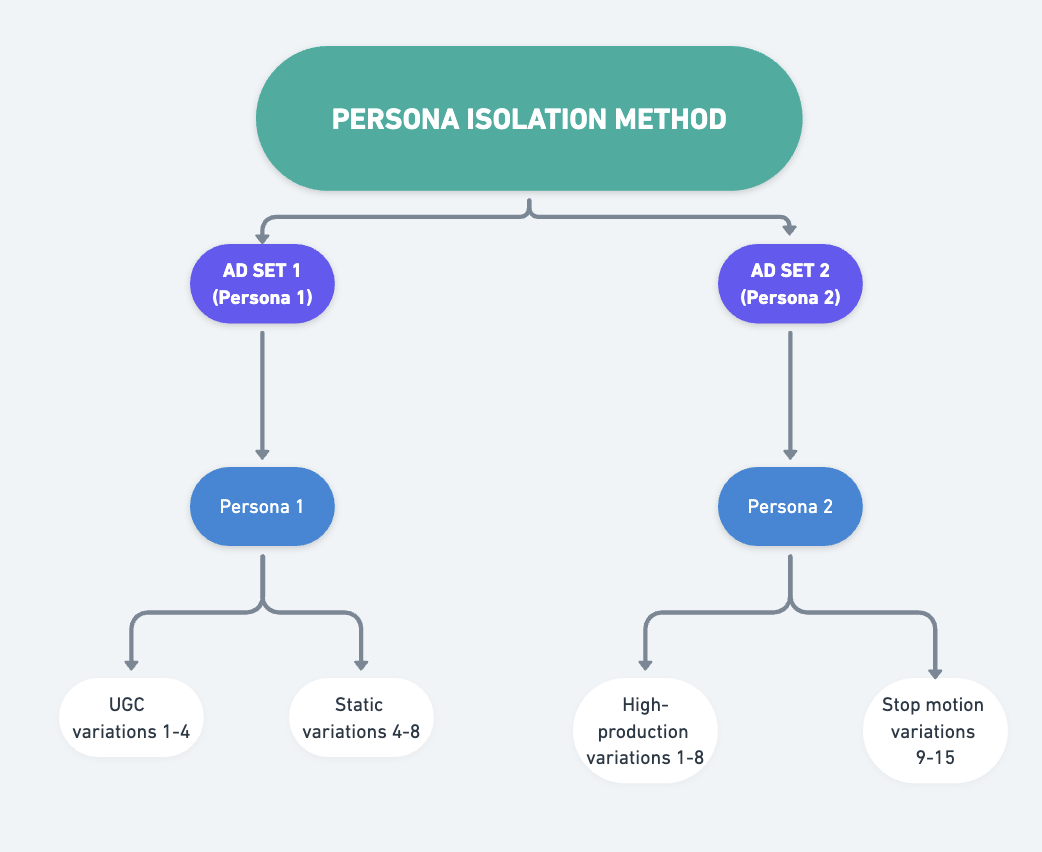

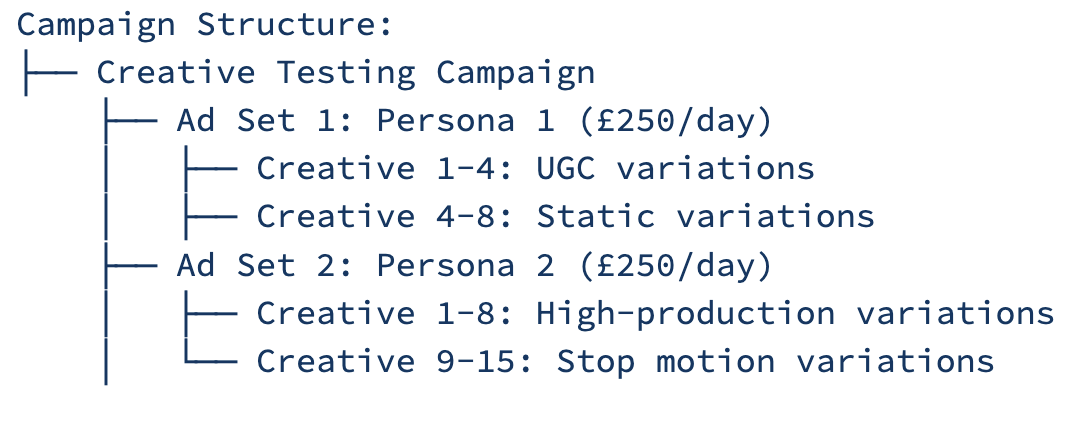

With persona isolation testing, instead of splitting by idea, you split by audience persona. One ad set contains all variations meant for “Persona 1”, the next for “Persona 2”, and so on. Inside each ad set you drop 8-20 creatives that speak to that group’s pain points and gather learnings from there.

The fastest road to profit isn’t looking for more winning concepts. It’s about spot-lighting and homing in on different mindsets that already make up the bulk of your revenue, then letting Meta’s algorithm optimise within those lanes. Persona isolation gives you the statistical power, clarity, and budget efficiency to do exactly that.

Why it fits mid-range budgets

When to use

Structure: Persona testing approach

Budget: AOV * 5+

Target: 25-50 conversions per ad set weekly

Ad set creative volume: 8-20 creatives per batch

Cadence: 7-10 days

Testing volume: 20+ creatives a week

How to structure the persona isolation testing campaigns

This example assumes a testing budget of ~£70k/monthly and an AOV of ~£50 meaning a £250 daily ad set budget is a sound approach. The approach is less agile as we need to adopt a more consolidated approach to maximise learnings and likelihood of budget ROI.
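As a rough check on how many persona ad sets those numbers can support at once, here is a small Python sketch. It assumes a 30-day month and that the whole testing budget goes to these ad sets; both are simplifications of ours, and the function name is hypothetical.

```python
def fundable_ad_sets(
    monthly_budget: float,
    daily_ad_set_budget: float,
    days_in_month: int = 30,  # assumption: a 30-day month
) -> int:
    """How many ad sets can run in parallel at the given daily budget."""
    daily_total = monthly_budget / days_in_month
    return int(daily_total // daily_ad_set_budget)


# Persona isolation example: about £70k a month, £250 a day per ad set.
persona_ad_sets = fundable_ad_sets(monthly_budget=70_000, daily_ad_set_budget=250)

print(persona_ad_sets)  # 9
```

If the number comes out lower than the number of personas you want to test, that is a signal to consolidate rather than stretch each ad set thinner.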

Simplified campaign structure

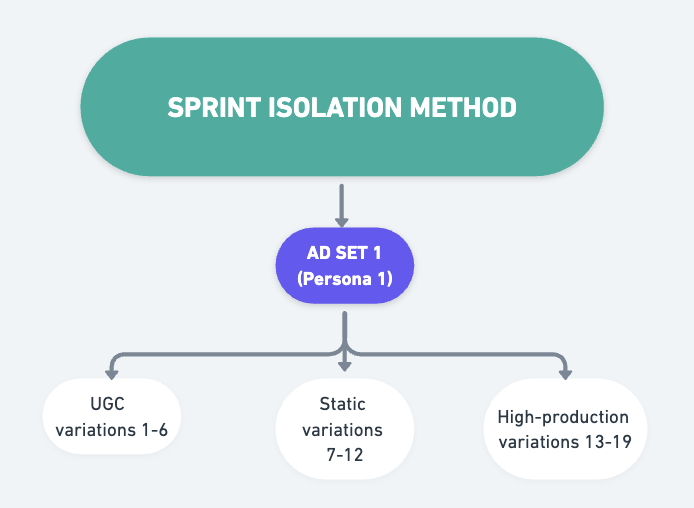

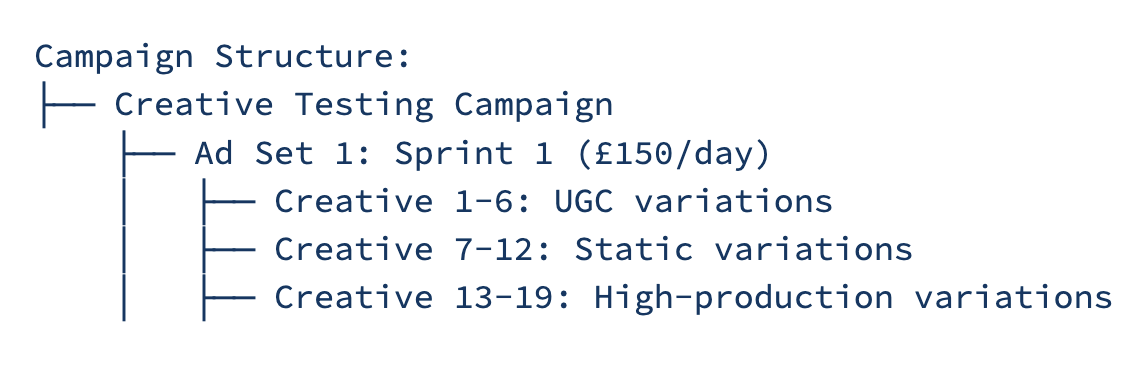

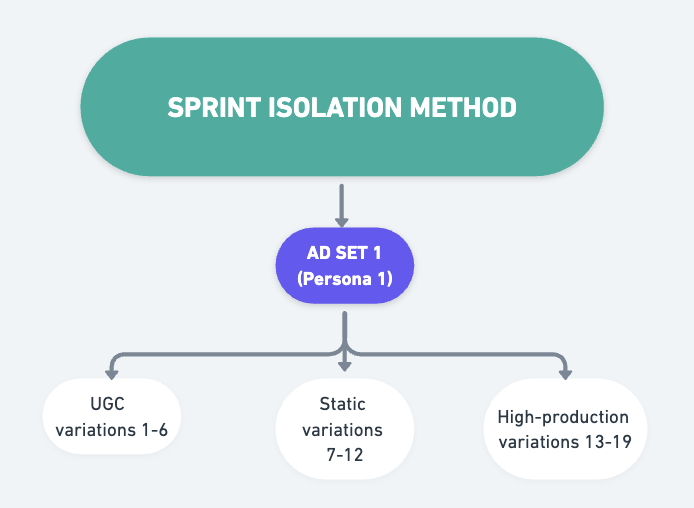

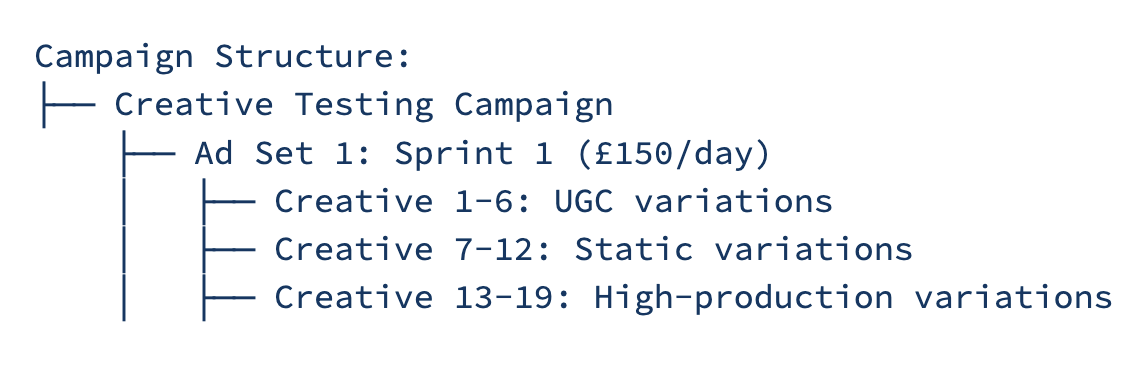

Sprint-isolation testing means dropping every new creative you want to evaluate into one single “sprint” ad set and letting Meta’s algorithm sort the winners for a fixed, short window.

Instead of carving budget across multiple ad sets (concept or persona isolation), you consolidate spend and conversions in one place, so Andromeda’s retrieval layer can gather statistically useful data on each ad without starving any of them.

When your monthly testing budget is under £30k (between £300 and £1k per day), every pound must work harder: it has to buy both learning and sales. Sprint isolation, running all new creatives in one highly consolidated ad set for 10-14 days, is purpose-built for that reality.

Structure: Sprint based testing

Budget: AOV * 8+

Target: 15-25 conversions per sprint

Ad set creative volume: 10-14 creatives per batch

Cadence: 10-14 days

Testing volume: 10+ creatives a week

This example assumes a testing budget of ~£15k/monthly and an AOV of ~£50 meaning a £150 daily ad set budget is a sound approach. The approach is highly consolidated to ensure budget is going to high performers in the shortest possible time.

Simplified campaign structure

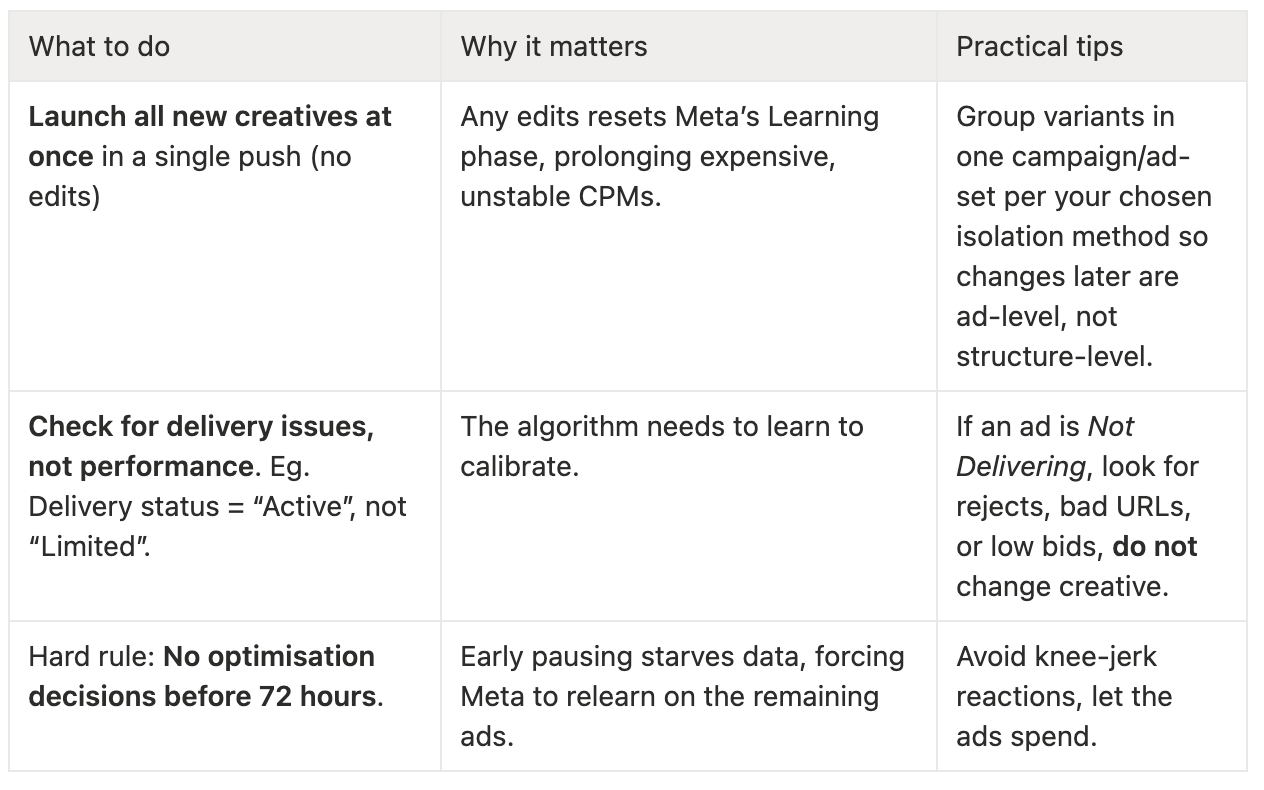

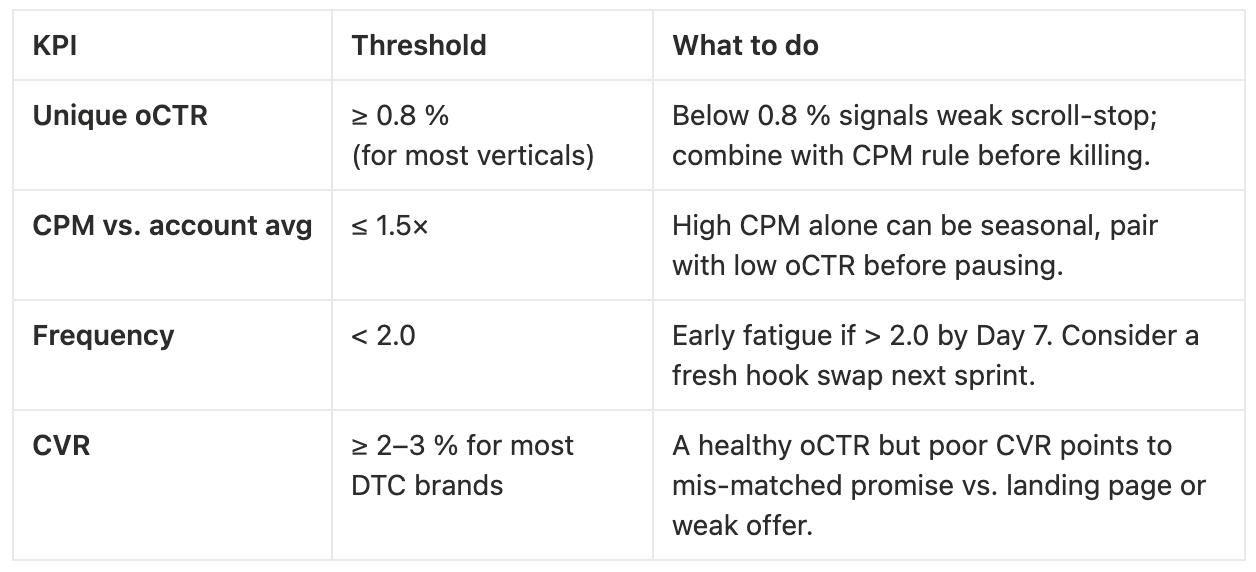

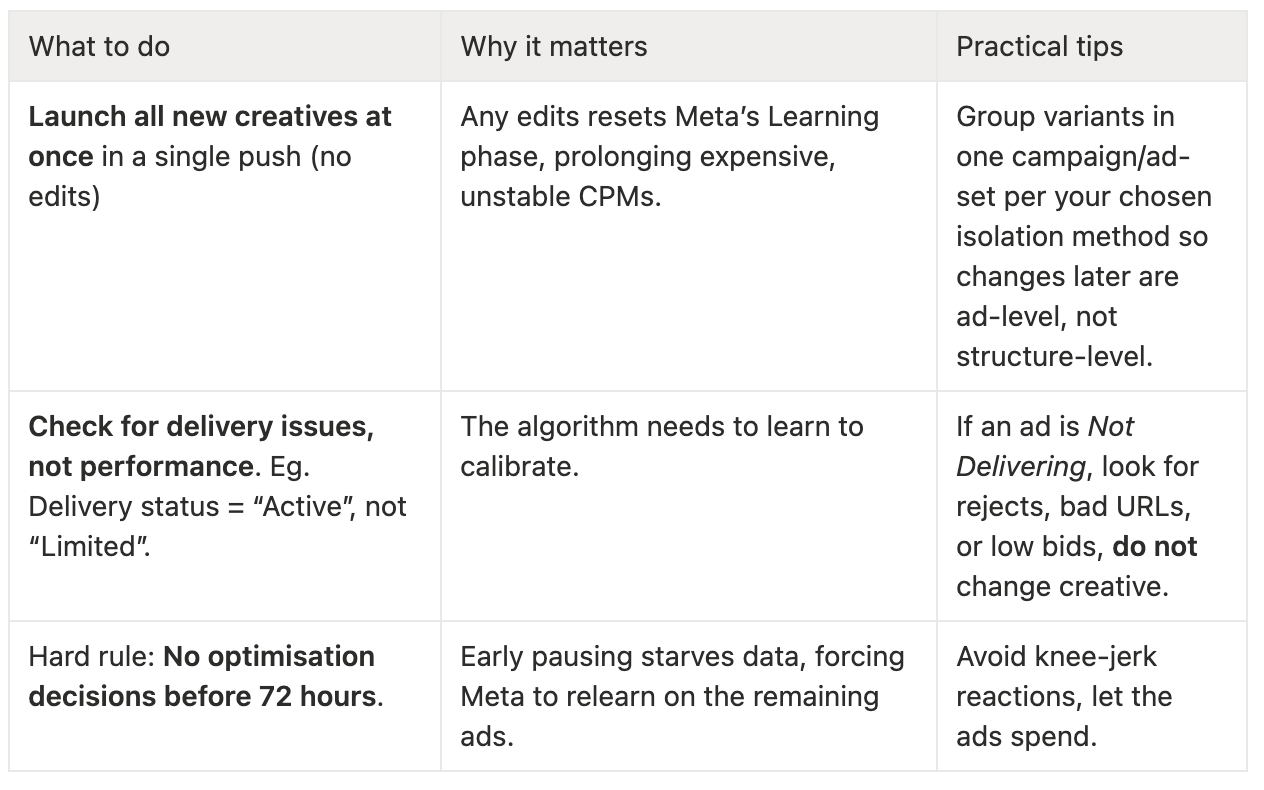

Launching a batch of fresh creatives is only half the battle; the real gains come from what you do in the first 30 days. Think of the process as a relay race with four clearly defined legs:

Following this cadence forces patience early, precision mid-stream, and aggression only when the numbers prove it. Stick to the guardrails below and you’ll turn chaotic launches into a disciplined scalable pipeline backed by data.

Actions

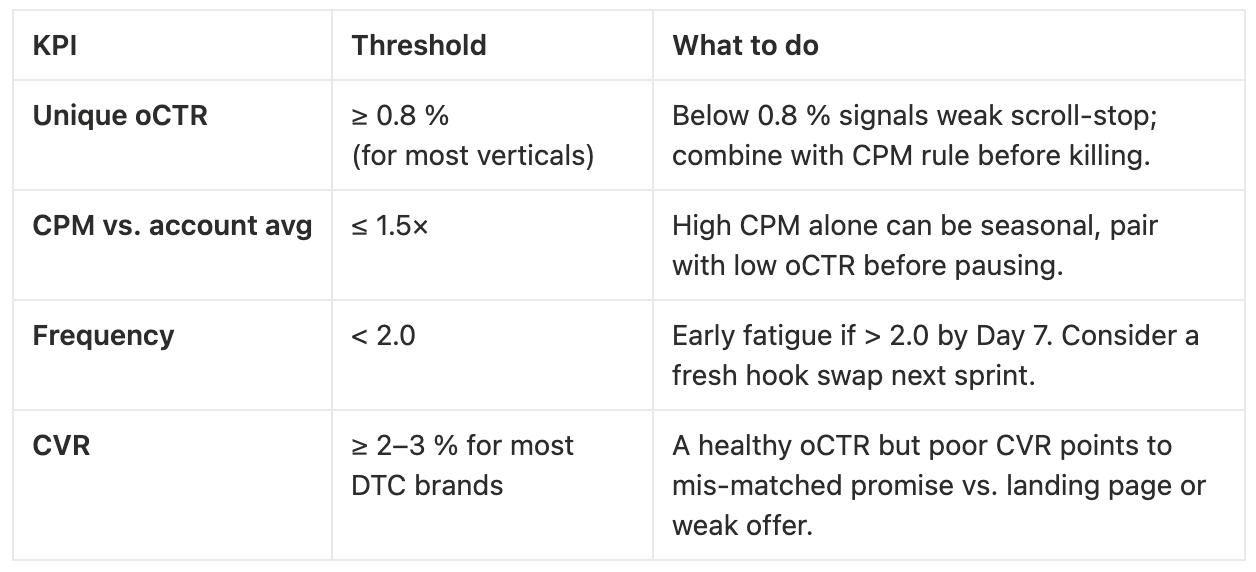

Primary question: Which ads deliver profitable conversions at scale?

| KPI | Target |
| --- | --- |
| ROAS | ≥ 100% of goal |
| CPA | ≤ target CPA |
| Conversion volume | > 10 events |
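The KPI targets above can be turned into a simple pass/fail check. This is a minimal Python sketch: the class and function names are hypothetical, and the goal values in the example are placeholders rather than recommendations.

```python
from dataclasses import dataclass


@dataclass
class AdResult:
    """Performance of one ad over the review window (hypothetical shape)."""

    roas: float
    cpa: float
    conversions: int


def meets_scale_targets(
    ad: AdResult,
    roas_goal: float,
    target_cpa: float,
    min_conversions: int = 10,
) -> bool:
    """True only when all three KPI targets are met."""
    return (
        ad.roas >= roas_goal               # ROAS at or above 100% of goal
        and ad.cpa <= target_cpa           # CPA at or below target
        and ad.conversions > min_conversions  # more than 10 events
    )


# Placeholder numbers for illustration only.
proven_ad = AdResult(roas=3.2, cpa=18.0, conversions=24)
early_ad = AdResult(roas=4.0, cpa=12.0, conversions=6)

print(meets_scale_targets(proven_ad, roas_goal=3.0, target_cpa=20.0))  # True
print(meets_scale_targets(early_ad, roas_goal=3.0, target_cpa=20.0))   # False
```

The second ad has the better ROAS and CPA but fails on volume, which is the point of the conversion threshold: strong early numbers on a handful of events are not yet a verdict.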

Optimisation moves

Scale indicators

Scaling method: If you have a high budget, scale horizontally and vertically. Continue to promote.

Disclaimer: If you’ve done the necessary checks and you have an outlying performer hitting the necessary performance benchmarks before this point - scale.

Before you start clicking pause or scale, anchor yourself to three principles that keep every decision objective and every pound productive.

This is how we’d suggest structuring budgets within your account.

High budget example (£50,000+/week)

In this example we have a higher proportion of ad spend allocated to existing proven ads due to account stability.

Lower budget example (£5,000/week)

In this example, we have a higher proportion of ad spend allocated to net new concept testing due to less stability and the need to allocate spend to the highest leverage point to find growth.

❌ Turning off ads too early (before 10 conversions)

❌ Testing too few creatives (volume is key in Andromeda)

❌ Ignoring learning phases (patience required)

❌ Over-optimising (let the algorithm learn)

❌ Budget fragmentation (ensure sufficient spend per test)

✅ Determine budget tier and testing methodology

✅ Set up proper campaign structure

✅ Prepare creative volume based on budget

✅ Establish optimisation schedule and rules

✅ Define success metrics and KPIs

✅ Create weekly review and scaling process

✅ Document learnings for future tests

You now have the framework to succeed in 2025: High-volume modular content, variable isolation, and structured campaign architecture.

But knowing how to test is one thing; having the resources to produce, analyse, and iterate is another.

If you’re ready to move from manual guessing to a data-backed creative machine, we can help. We’ve generated over £400M in revenue for DTC brands by feeding Meta’s algorithm exactly what it wants: diverse, high-performing creative at scale.

Don’t let creative fatigue stall your growth.

Book a call and chat to us here


Our creative systems have helped generate over £400M+ in revenue for DTC brands over the past few years. Over those years, staying agile has been one of the main constants. The Meta landscape has changed drastically over the past 5 years, and 2025 is no different.

The rules of paid social have changed - again.

Meta’s new Andromeda engine has replaced tidy, rule-based targeting with a hungry, probabilistic system that lives on fresh data and limitless creative options. It can trial 5,000 ads a week, not 50, which means the brands that win are the ones that feed it a steady diet of diverse, insight-driven assets - and they do it fast.

This guide distils everything you need to thrive in that reality.

Inside, you’ll learn how to generate strategic creative volume, test with surgical precision, and scale winners without wasting budget. Whether you’re running £500 a day or £50k, you’ll get concrete frameworks, checklists, and campaign architectures that turn chaos into disciplined experimentation.

Over the past few years, Meta has been shifting away from hands on in the account towards automation. From the early days of automation with CBOs, all the way through to their newest iteration of Advantage+ dubbed Meta Andromeda.

.png)

Meta’s Andromeda update has fundamentally shifted creative testing dynamics. It requires a strategic volume-based approach over traditional concept isolation, it can now support 5000 ads a week, rather than 50. The brands that understand this and cater to Andromeda’s needs will be the brands that win on Meta.

Ultimately, performance now depends on strategic volume, speed, and iteration of creative. You need to focus on creating a high volume of content that appeals to distinctly different audience personas and preferences.

“Soul-mate theory” is just shorthand for Andromeda’s goal: every ad impression should feel as if the advertiser crafted that exact image and line of copy specifically for the person viewing it, and the system now has the scale and smarts to try.

You should now operate under the theory that there is a perfect creative for each user. Instead of “Which audience fits this ad?”, the question becomes “Which specific ad version will resonate most with this single user right now?”

But what does that actually mean in this context?

The algorithm behaved like a rulebook.

If a user matched certain parameters (e.g., location, age, interest in “running shoes”), the system would follow a fixed chain of logic to choose which ad to show.

Every time the same inputs came in, it produced the same output.

This meant Meta was limited, it was:

The algorithm now behaves like a statistician + gambler + learner.

It doesn’t just look at hard rules; it weighs probabilities that a given ad will perform best based on vast, shifting signals: behaviour, history, context, creative, time of day, etc.

Two identical users might now see different ads, because the system is optimising based on the likelihood of engagement or conversion, not fixed logic.

This creates an algorithm that is:

Moving from deterministic to probabilistic means the ad-picking brain went from rigid “if-this-then-that” rules to a smart, ever-learning “best-guess” approach. Instead of guaranteeing the same ad sequence every time, it now plays the odds. Your customers get ads that feel more relevant, and you see better results, and the algorithm gets smarter.

Anyone who is involved with growing an e-com brand with Meta needs to understand this:

You need a high volume of distinctly different assets across all stages of awareness phases, underpinned by the psychological messaging frameworks that drive your core customers to convert. By doing this, you are more likely to serve the right ads, to the right people, at the right time.

When it comes to performance creative, “just make something that looks good” doesn’t cut it. Every ad you put out into the world is a chance to gather data - to learn what resonates, what converts, and what drives action etc. The best creatives aren’t just built to impress; they’re built to test. You should be dedicating 20% of your time to big swing concepts and 80% to making incremental progress through iteration.

Meta doesn’t just want more ads - it wants different ads.

When you design with testing in mind, you're not just guessing your way to a winning ad. You’re creating a clear path to get there faster using hypothesis-driven thinking, repeatable systems, and smart tweaks that let the data guide the next round.

With Meta’s Andromeda update, creative diversification is no longer optional. Ads that look too similar now get grouped and penalised - meaning that iteration must be about variation, not just minor tweaks

This section breaks down exactly what that looks like, including how to bake experimentation into the bones of your creative process.

Before you even think about shooting or designing anything, you should be thinking like a strategist.

Here’s the testing method we use:

1. Start with a hypothesis: e.g., “Urgency in the headline will lift CTR.”

2.Choose a creative baseline: Identify a top-performing ad concept to optimise. For example, a POV style video, before & after.

3. Isolate your variables: 3–6 key elements like the hook, creator, visuals, and format.

4. Run the test, track results, and log learnings: Capture what worked (and what didn’t) in your learnings tracker.

5: Diversify Beyond Similarity: Variations need to meaningfully differ in look, feel, or motivator - not just copy. Treat the first 3 seconds as a key variable as Meta uses this to determine similarity.

When we say "create with testing in mind," we mean that every decision you make, from headline to voiceover tone, should double as a potential test. What learnings can you gather from each creative decision that you make?

Your hook isn’t just the words. It’s the visual moment that defines whether your ad is treated as new. Meta now groups ads as ‘the same’ if the opening scene looks similar, even if the script changes. That means testing hooks must include visual swings - different settings, creators, and styles - not just different lines

Here’s an example of how tests used to be run…

This leaned into making small changes that focused on:

But these would all be flagged by Meta as looking too similar.

This is what Meta sees as differentiated - while they’re all static examples, the messaging and visuals are completely different.

Going forward:

Then there’s angle testing, essentially, the main story or focus of your ad. You can also dial deeper into pain point specificity. Take menopause, for instance, that’s a broad category. But zoom in, and you’ll find very different resonant messages: “brain fog,” “joint pain,” “wrinkles.”

These micro-pain points can each become their own testable angle, each appealing to a slightly different subset of your audience.

That granularity often unlocks a stronger emotional connection and audience segments you might not have known existed without testing.

Angle testing isn’t just about the story you tell - it’s about who you’re telling it to. Meta rewards creative built for different personas. That means pairing each angle with a distinct visual and tone, so the ad not only resonates with a new motivator but also qualifies as a unique concept

Once you’ve found a winning angle/messaging?

Take that top-performing messaging and angles, but change the format and visuals in a way that makes it VERY different and therefore delivers to a different individual who might like to consume in a different way than the original message was delivered.

For example…

One of the most effective tests you can run is on your offer positioning. Sometimes a small tweak in copy can completely change how compelling it feels.

You might test:

Here’s a bunch of offers you can test to see what resonates most with your audience.

Awareness-Level Messaging: Speak to Where They Are

People interact with your ad differently depending on how aware they are of their problem or your product. Your messaging should reflect that.

Let’s say we’re promoting a gut health supplement:

Visual Testing: Change the Frame

The words can stay the same, but the visuals? A major lever. Even subtle shifts in setting, model, or colour palette can make or break performance.

Test visuals like:

Every product has multiple value propositions, but which one resonates most?

Try framing like:

Also test how you present that social proof:

Creative diversification has shifted from a nice-to-have to a growth essential. Ads that look too similar - especially in the first 3 seconds - are grouped, capped, and penalised.

That means testing is no longer just about iterations on a theme, but about building genuinely different concepts that speak to new people.

Here’s how to diversify in practice:

To Wrap It Up...

Creative testing used to be about more ads. Today, it’s about different ads.

Scaling in Meta isn’t about guesswork or re-skinning winners anymore. It’s about building with diversification baked in:

The ads that scale longest and cost least are the ones Meta sees as unique concepts and your audience sees as personally relevant.

When planning briefs, ask yourself:

If you’re serious about building scalable, high-performing ads, your creative should be designed for it.

That means structuring, scripting and shooting content in a way that allows you to pull it apart, reassemble it, and test different combinations of hooks, bodies, and CTAs without having to reshoot everything from scratch.

In this section, we’ll break down how to approach creative architecture in a test-friendly way.

Your goal is to script and shoot in three interchangeable sections:

Each section should be written with 3–5 variations that hit the same angle from different emotional or messaging styles.

Let’s walk through how to approach each one in granular detail.

Hooks are arguably the number one most important part of an ad.

They are your scroll-stopper. And your ad should never rely on just one.

Instead, script hooks across different strategy types with different angles and formats.

For example:

🎬 Tip: Record hooks separately from the body. Keep them short (1–2 lines), and shoot with different tone options, or different creators which may resonate with different audiences.

Once you’ve got their attention, the body of the ad needs to deliver the story. But the key is: write and shoot the body in testable blocks, not one flowing monologue.

Each “block” should be:

Think of these as plug-and-play building bricks that can be reordered or swapped based on the hook or CTA.

A good CTA is not always “Buy now.” You need to match audience intent and awareness level…

Here’s a template shooting structure you can copy and adapt for any campaign

If you walk away with one insight from this section, let it be this:

Don’t build ads. Build toolkits.

The highest-performing brands don’t rely on a perfect 60-second ad. They build modular, flexible, testable creative systems.

Now you can too!

Dialled-in creative testing is the difference between “nice ads” and repeatable revenue. On Meta, the fastest path to truth is isolation - giving each idea, audience, or asset its own lane so the algorithm can hand you a clean verdict. This guide walks you through three battle-tested tiers, matched to the size of your testing budgets:

In a nutshell, concept isolation testing works by spinning up separate ad sets for each big idea e.g., “Sleep-better angle”, “Doctor-endorsed angle”, “Price-drop angle”. Each ad set contains 3-15 creatives that only vary micro-elements like hooks or CTAs, so the ad-set results cleanly tell you which concept drives demand.

When you have the fire-power to fund multiple learning funnels in parallel, the smartest first question isn’t necessarily “Which thumbnail?”—it’s “Which idea resonates?” Concept isolation testing answers that question quickest, protects large budgets from diffuse spending, and sets the stage for downstream other tests once you’ve crowned a hero concept.

Why it works for higher budgets

When to use

Structure: Concept-separated ad sets

Budget: AOV * 3+

Target: 50+ conversions per ad set weekly

Ad set creative volume: 3-15 creatives per concept

Cadence: 3-7 days

Testing volume: 50+ creatives a week

This example assumes a testing budget of ~£100k and an AOV of ~£30 meaning a £100 daily ad set budget is a sound approach. The approach is agile due to budget flexibility.

Simplified campaign structure

The persona isolation testing method works by instead of splitting by idea, you split by audience persona. One ad set contains all variations meant for “Persona 1”, the next for “Persona 2”, and so on. Inside each ad set you drop 8-20 creatives that speak to that group’s pain points and gather learnings from there.

The fastest road to profit isn’t looking for more winning concepts. It’s spotlighting and homing in on the different mindsets that already make up the bulk of your revenue, then letting Meta’s algorithm optimise within those lanes. Persona isolation gives you the statistical power, clarity, and budget efficiency to do exactly that.

Why it fits mid-range budgets

When to use

Structure: Persona testing approach

Budget: AOV * 5+

Target: 25-50 conversions per ad set weekly

Ad set creative volume: 8-20 creatives per batch

Cadence: 7-10 days

Testing volume: 20+ creatives a week

How to structure the persona isolation testing campaigns

This example assumes a testing budget of ~£70k a month and an AOV of ~£50, meaning a £250 daily ad set budget is a sound approach. The approach is less agile, as we need to consolidate more to maximise learnings and the likelihood of a return on the budget.

Simplified campaign structure

Sprint-isolation testing means dropping every new creative you want to evaluate into one single “sprint” ad set and letting Meta’s algorithm sort the winners for a fixed, short window.

Instead of carving budget across multiple ad sets (concept or persona isolation), you consolidate spend and conversions in one place, so Andromeda’s retrieval layer can gather statistically useful data on each ad without starving any of them.

When your monthly testing budget is under £30k (between £300-£1k per day), every pound needs to work harder: it has to buy both learning and sales. Sprint isolation, running all new creatives in one highly consolidated ad set for 10-14 days, is purpose-built for that reality.

Structure: Sprint based testing

Budget: AOV * 8+

Target: 15-25 conversions per sprint

Ad set creative volume: 10-14 creatives per batch

Cadence: 10-14 days

Testing volume: 10+ creatives a week

This example assumes a testing budget of ~£15k a month and an AOV of ~£50, meaning a £150 daily ad set budget is a sound approach. The approach is highly consolidated to ensure budget is going to high performers in the shortest possible time.
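To keep the three tiers straight, their creative-volume and cadence ranges can be collected in one lookup and checked before launch. The figures below are copied from the tier specs in this guide; the `Tier` class, the `check_ad_set` helper, and the sample plan are illustrative assumptions rather than part of any Meta tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    creatives: tuple      # (min, max) creatives per ad set or batch
    cadence_days: tuple   # (min, max) days per test


# Ranges copied from the concept, persona, and sprint specs in this guide.
TIERS = {
    "concept": Tier(creatives=(3, 15), cadence_days=(3, 7)),
    "persona": Tier(creatives=(8, 20), cadence_days=(7, 10)),
    "sprint": Tier(creatives=(10, 14), cadence_days=(10, 14)),
}


def check_ad_set(tier_name, n_creatives, run_days):
    """Return a list of guardrail problems; an empty list means the plan fits the tier."""
    tier = TIERS[tier_name]
    issues = []
    lo, hi = tier.creatives
    if not lo <= n_creatives <= hi:
        issues.append(f"{n_creatives} creatives is outside {lo}-{hi}")
    lo, hi = tier.cadence_days
    if not lo <= run_days <= hi:
        issues.append(f"{run_days} days is outside {lo}-{hi}")
    return issues


# Hypothetical sprint plan: the batch size fits, but a 7-day window is too short.
print(check_ad_set("sprint", n_creatives=12, run_days=7))
print(check_ad_set("persona", n_creatives=12, run_days=8))
```

An empty list means the plan sits inside the tier's ranges; anything else is a prompt to adjust the batch or the window before spending.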

Simplified campaign structure

Launching a batch of fresh creatives is only half the battle; the real gains come from what you do in the first 30 days. Think of the process as a relay race with four clearly defined legs:

Following this cadence forces patience early, precision mid-stream, and aggression only when the numbers prove it. Stick to the guardrails below and you’ll turn chaotic launches into a disciplined, scalable pipeline backed by data.

Actions

Primary question: Which ads deliver profitable conversions at scale?

KPI: Target

ROAS: ≥ 100% of goal

CPA: ≤ target CPA

Conversion volume: > 10 events
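The KPI targets above translate directly into a pass/fail check. This is a minimal sketch: the `AdResult` fields, the sample ads, and the goal values are hypothetical, and only the three thresholds (ROAS at or above goal, CPA at or below target, more than 10 conversion events) come from the guide.

```python
from dataclasses import dataclass


@dataclass
class AdResult:
    name: str
    roas: float        # return on ad spend, e.g. 2.6
    cpa: float         # cost per acquisition in £
    conversions: int   # conversion events recorded so far


def passes_kpis(ad, roas_goal, target_cpa, min_conversions=10):
    """True only when all three KPI targets are met."""
    return (
        ad.roas >= roas_goal
        and ad.cpa <= target_cpa
        and ad.conversions > min_conversions
    )


# Hypothetical results and goals, for illustration only.
ads = [
    AdResult("hook_a", roas=2.6, cpa=18.0, conversions=14),
    AdResult("hook_b", roas=3.1, cpa=15.0, conversions=6),   # too few events
    AdResult("hook_c", roas=1.9, cpa=27.0, conversions=22),  # misses ROAS and CPA
]
winners = [ad.name for ad in ads if passes_kpis(ad, roas_goal=2.5, target_cpa=20.0)]
print(winners)
```

Note that an ad with strong ROAS but too few events does not pass, which guards against promoting a creative on thin data.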

Optimisation moves

Scale indicators

Scaling method: If you have a high budget, scale horizontally and vertically. Continue to promote.

Disclaimer: If you’ve done the necessary checks and you have an outlying performer hitting the necessary performance benchmarks before this point - scale.

Before you start clicking pause or scale, anchor yourself to three principles that keep every decision objective and every pound productive.

This is how we’d suggest structuring budgets within your account.

High budget example (£50,000+/week)

In this example we have a higher proportion of ad spend allocated to existing proven ads due to account stability.

Lower budget example (£5,000/week)

In this example, we have a higher proportion of ad spend allocated to net new concept testing due to less stability and the need to allocate spend to the highest leverage point to find growth.

❌ Turning off ads too early (before 10 conversions)

❌ Testing too few creatives (volume is key in Andromeda)

❌ Ignoring learning phases (patience required)

❌ Over-optimising (let the algorithm learn)

❌ Budget fragmentation (ensure sufficient spend per test)

✅ Determine budget tier and testing methodology

✅ Set up proper campaign structure

✅ Prepare creative volume based on budget

✅ Establish optimisation schedule and rules

✅ Define success metrics and KPIs

✅ Create weekly review and scaling process

✅ Document learnings for future tests

You now have the framework to succeed in 2025: High-volume modular content, variable isolation, and structured campaign architecture.

But knowing how to test is one thing; having the resources to produce, analyse, and iterate is another.

If you’re ready to move from manual guessing to a data-backed creative machine, we can help. We’ve generated over £400M in revenue for DTC brands by feeding Meta’s algorithm exactly what it wants: diverse, high-performing creative at scale.

Don’t let creative fatigue stall your growth.

Book a call and chat to us here
